A major cable/satellite TV provider approached us to implement a new video service as an expansion to their emerging home security product line. The service would allow customers to remotely view video from their home security system on web and mobile devices.

Home Security Video Service Development

The solution required a scalable video cloud service to ingest, manage, and play out video and associated event metadata to web and mobile clients. Customers of the service would receive notifications of motion, doors opening, and other security events through mobile services, then log in to view video from their home security system. A navigation bar would let customers quickly jump to events of interest in the video feed.
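
The event-driven navigation bar implies an index from event timestamps to positions in the recorded feed. The sketch below is one minimal way to back such a bar, assuming events arrive as timestamped records; the `SecurityEvent` and `EventTimeline` names and the event labels are hypothetical, not part of the delivered system.

```python
import bisect
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True, order=True)
class SecurityEvent:
    timestamp: float  # seconds since the epoch when the sensor fired
    kind: str         # hypothetical labels such as "motion" or "door_open"


class EventTimeline:
    """Sorted event index backing a navigation bar over a recorded video feed."""

    def __init__(self, events: List[SecurityEvent]) -> None:
        self._events = sorted(events)
        self._times = [e.timestamp for e in self._events]

    def markers(self, start: float, end: float) -> List[SecurityEvent]:
        """Events to draw on the navigation bar for the visible window [start, end]."""
        lo = bisect.bisect_left(self._times, start)
        hi = bisect.bisect_right(self._times, end)
        return self._events[lo:hi]

    def next_event(self, position: float) -> Optional[SecurityEvent]:
        """First event strictly after the current playback position, if any."""
        i = bisect.bisect_right(self._times, position)
        return self._events[i] if i < len(self._events) else None

    def previous_event(self, position: float) -> Optional[SecurityEvent]:
        """Last event strictly before the current playback position, if any."""
        i = bisect.bisect_left(self._times, position)
        return self._events[i - 1] if i > 0 else None
```

Binary search keeps "skip to next event" cheap even for a long recording, since the player only needs the neighbours of the current position.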

Video Solution Requirements for Security and Cloud Access

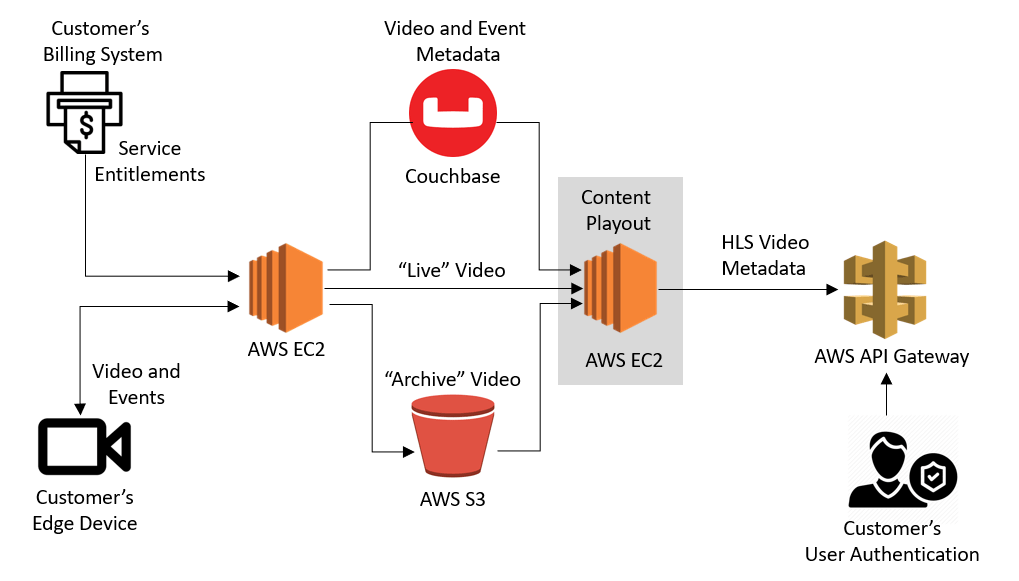

Our client had several components we needed to work with: 1) a predefined video streaming specification for their edge devices, 2) APIs for user authentication, and 3) validation of service entitlement with the billing system. They also wanted to deploy the new video service on the AWS cloud initially, then migrate it to their own data center once that was provisioned. This was an exciting opportunity for us to build, integrate, and deliver a scalable video infrastructure! Given our AWS partnership and long history in video, we were well suited to take on this development effort.
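
Combining the client's authentication and entitlement checks amounts to a gate in front of every playback request. The sketch below shows that ordering under stated assumptions: `authenticate` and `is_entitled` are hypothetical stand-ins for the client's real APIs, injected as callables, and the `"home-video"` entitlement name is invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class PlaybackDecision:
    allowed: bool
    reason: str


def authorize_playback(
    token: str,
    authenticate: Callable[[str], Optional[str]],
    is_entitled: Callable[[str, str], bool],
    service: str = "home-video",  # hypothetical entitlement name
) -> PlaybackDecision:
    """Gate video access on authentication first, then billing entitlement.

    `authenticate` maps a session token to an account ID (or None) and
    `is_entitled` asks the billing system whether that account has `service`.
    Both are placeholders for the client's actual APIs.
    """
    account_id = authenticate(token)
    if account_id is None:
        return PlaybackDecision(False, "unauthenticated")
    if not is_entitled(account_id, service):
        return PlaybackDecision(False, "not entitled")
    return PlaybackDecision(True, "ok")
```

Injecting the two checks keeps the video service itself independent of the client's identity and billing implementations, which matters when the deployment later moves from AWS to a private data center.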

We transformed how a major cable/satellite TV provider’s customers interact with their home security systems, delivering remote video access directly to their devices while minimizing operating costs.

To stay portable to our customer’s data center and keep operating costs as low as possible, our engineering team designed a scalable infrastructure built on EC2 instances running CentOS, with S3 storage as the primary backbone. Our high-level architecture is shown in the figure.

High-Level Architecture for the Home Security Video System

Challenges with the Video System Development

The customer’s choice of WebSockets, for their routability and two-way communication between the edge devices and the server, drove three interesting challenges on this project.

Scaling Cloud-Connected Devices

Our first challenge was scaling the population of always-connected devices while running as few EC2 instances as possible to keep operating costs down. We achieved this by forcing each device to reconnect periodically. Because every reconnection passes back through the load balancers, active devices could be compacted onto a smaller group of EC2 instances, raising the utilization of each instance.
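
The effect of forced reconnects can be illustrated with a small simulation. It assumes least-connections routing and an instance marked as draining (no new connections); the 15-minute maximum connection age, the ±20% jitter, and all names are hypothetical choices for illustration, not the production settings.

```python
import random
from dataclasses import dataclass, field
from typing import List, Set

MAX_CONNECTION_AGE_S = 15 * 60  # hypothetical: force a reconnect roughly every 15 minutes
JITTER_FRACTION = 0.2           # hypothetical: +/-20% spread so devices don't reconnect in lockstep


def reconnect_deadline(connected_at: float, rng: random.Random) -> float:
    """When the server should close a device's WebSocket so the device reconnects."""
    jitter = rng.uniform(-JITTER_FRACTION, JITTER_FRACTION) * MAX_CONNECTION_AGE_S
    return connected_at + MAX_CONNECTION_AGE_S + jitter


@dataclass
class Instance:
    name: str
    draining: bool = False  # True once we decide to scale this instance in
    devices: Set[int] = field(default_factory=set)


def pick_instance(pool: List[Instance]) -> Instance:
    """Least-connections routing restricted to instances still accepting devices."""
    return min((i for i in pool if not i.draining), key=lambda i: len(i.devices))


def simulate_scale_in(num_devices: int = 300, seed: int = 1) -> List[Instance]:
    rng = random.Random(seed)
    pool = [Instance("ec2-a"), Instance("ec2-b"), Instance("ec2-c")]
    for d in range(num_devices):  # devices start spread evenly across three instances
        pool[d % len(pool)].devices.add(d)
    pool[2].draining = True  # decide to shrink the fleet by one instance
    # Every device hits its forced reconnect within one jittered max-age window.
    schedule = sorted((reconnect_deadline(0.0, rng), d) for d in range(num_devices))
    for _, d in schedule:
        current = next(i for i in pool if d in i.devices)
        current.devices.discard(d)            # server closes the socket
        pick_instance(pool).devices.add(d)    # device reconnects through the load balancer
    return pool
```

After one reconnect cycle, the draining instance is empty and its devices are spread across the remaining two, so it can be terminated without dropping anyone. Without forced reconnects, a long-lived WebSocket could pin a device to that instance indefinitely.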

Storing Video Content

Our second challenge was cost-effective content storage. Video arrived in small chunks over WebSockets, and S3 charges per write request, so pulling data off a WebSocket and pushing each small chunk to S3 would have been extremely expensive. Our solution was to stream the video to EC2-attached storage until the maximum S3 transfer size was reached, then push it to S3 in one write. This minimized S3 write costs.
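
The spool-then-upload pattern can be sketched as follows, assuming chunks are appended to a file on instance-attached disk and flushed once a size threshold is reached. The `upload` callable is a hypothetical hook (in practice it might wrap boto3's `put_object`), and the `segment-NNNNNN.ts` key scheme is invented for illustration.

```python
import os
import tempfile
from typing import Callable


class SegmentSpooler:
    """Spools WebSocket video chunks to local disk and uploads them as large objects."""

    def __init__(self, upload: Callable[[str, bytes], None], flush_bytes: int, spool_dir: str):
        self._upload = upload            # hypothetical hook, e.g. a wrapper around an S3 PUT
        self._flush_bytes = flush_bytes  # accumulate this much before paying for one write
        self._spool_dir = spool_dir
        self._seq = 0
        self._open_spool()

    def _open_spool(self) -> None:
        fd, self._path = tempfile.mkstemp(dir=self._spool_dir, suffix=".ts")
        self._file = os.fdopen(fd, "wb")
        self._size = 0

    def write_chunk(self, chunk: bytes) -> None:
        """Append one chunk pulled off the WebSocket; upload only when the spool is full."""
        self._file.write(chunk)
        self._size += len(chunk)
        if self._size >= self._flush_bytes:
            self._flush()

    def _flush(self) -> None:
        if self._size == 0:
            return
        self._file.close()
        with open(self._path, "rb") as f:
            self._upload(f"segment-{self._seq:06d}.ts", f.read())  # one billed write
        os.remove(self._path)
        self._seq += 1
        self._open_spool()

    def close(self) -> None:
        """Upload any remainder and delete the spool file."""
        self._flush()
        self._file.close()
        os.remove(self._path)
```

With 64 KiB chunks and a 1 MiB threshold, for example, 100 chunks cost 7 writes (six full 1 MiB objects plus a 256 KiB remainder) instead of 100. The trade-off is that data sits on the EC2 instance until its spool fills, which leads directly to the next challenge.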

Reducing Video Delays

This cost-saving strategy led to our third challenge. Because several seconds of video were cached on the EC2 instances before being transferred to S3, a user who logged in right after an event notification to review video (a plausible use case for security video) would see greatly delayed content if we streamed only from S3. Counting edge-to-server, server-to-S3, and HLS delays, the lag could approach a minute.

We worked with our client to determine the maximum lag acceptable to their customers, then weighed options that met this requirement with both development and operations costs in mind. We presented two potential solutions with cost and schedule estimates. The first option was to buffer less data on EC2, guaranteeing that the video in S3 would never exceed the acceptable lag. The second, more complex option was to pull recent data directly from the EC2 instances, then switch to S3 if the user scanned back to older video. We recommended the EC2 streaming solution because its engineering cost would quickly be dwarfed by the additional S3 write costs of buffering less data in operation. The customer agreed, and we implemented a combined EC2 and S3 HLS streaming solution.
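
One way to picture the combined solution is a playlist server that decides, segment by segment, whether to point the player at the EC2 instance or at S3. This sketch assumes segments are addressable by sequence number on both tiers and that the server knows which range is still cached on EC2. The hostnames, key scheme, and 2-second segment length are hypothetical, and this is not the production implementation.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SegmentSources:
    ec2_base: str  # hypothetical: HTTP endpoint on the ingest instance holding recent segments
    s3_base: str   # hypothetical: S3 (or CDN) prefix holding archived segments


def segment_url(seq: int, oldest_on_ec2: int, newest_on_ec2: int, src: SegmentSources) -> str:
    """Serve a segment from EC2 while it is still cached there, otherwise from S3."""
    if seq > newest_on_ec2:
        raise ValueError(f"segment {seq} has not been recorded yet")
    base = src.ec2_base if seq >= oldest_on_ec2 else src.s3_base
    return f"{base}/segment-{seq:06d}.ts"


def build_playlist(first_seq: int, last_seq: int, oldest_on_ec2: int,
                   newest_on_ec2: int, src: SegmentSources, segment_s: float = 2.0) -> str:
    """Build an HLS media playlist whose entries mix EC2 and S3 segment URLs."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",  # version 3 allows decimal #EXTINF durations
        f"#EXT-X-TARGETDURATION:{math.ceil(segment_s)}",
        f"#EXT-X-MEDIA-SEQUENCE:{first_seq}",
    ]
    for seq in range(first_seq, last_seq + 1):
        lines.append(f"#EXTINF:{segment_s:.3f},")
        lines.append(segment_url(seq, oldest_on_ec2, newest_on_ec2, src))
    return "\n".join(lines) + "\n"
```

Because the player only follows URLs, it never needs to know which tier it is reading from; as EC2 spools are flushed, the server advances `oldest_on_ec2` and older entries naturally resolve to S3.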

Web-Based System Dashboards

While not depicted in the figure, we also provided a skinnable, browser-based video player and navigator, and worked with the client’s mobile development team to integrate our APIs for end-user video viewing and navigation. We also provided a web-based admin console for managing the system and viewing its status, as well as centralized logging for troubleshooting.

Results for the Cloud Video Solution

After we completed integration with the customer’s user authentication and billing systems, the product launched and ran successfully until a change in business strategy led to its deprecation.

Learn more about Cardinal Peak’s video product design services, streaming media service development and physical security product engineering, or reach out to our video system design experts.