These days, audio and video applications show up in everything from IoT devices to embedded products, and one challenge that comes up time and time again is synchronization. Synchronizing a single audio stream with a single video stream is the most common case, but some applications must synchronize multiple A/V streams, which is much more complicated. In this two-part blog post, we'll consider the basic case of synchronizing a single audio and video stream: today we'll look at why we need to synchronize, and later this week we'll look at how to do it.

Why do we need to synchronize?

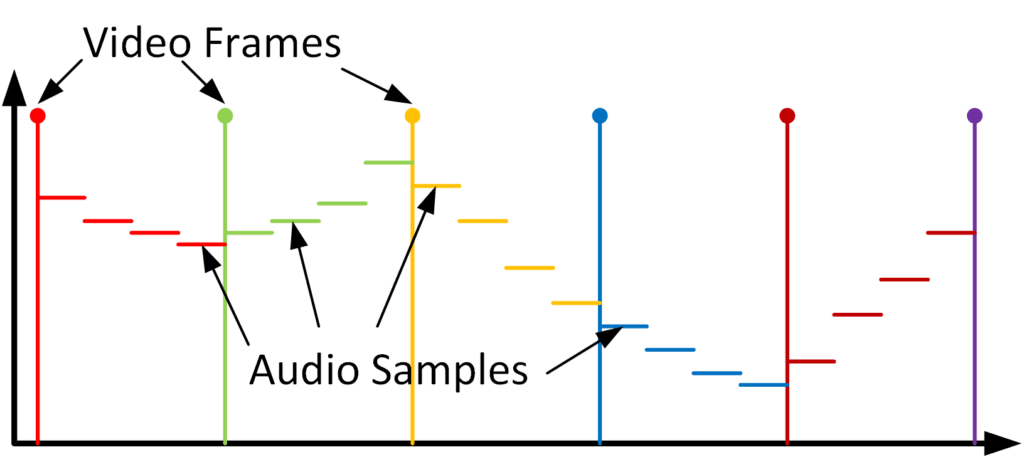

Digital audio and video both consist of discrete-time approximations of a continuous signal. A typical recording has thirty or sixty video frames per second and roughly 44,100 to 48,000 audio samples per second, although these values vary widely with the capture device and the application. If audio and video come from separate capture devices, it's reasonable to expect that each device will perform its own encoding and even its own timestamping. We can visualize audio/video synchronization with an illustration in which vertical lines mark video frames, horizontal lines mark audio samples, and the same color indicates data collected at the same time. In real life there can easily be hundreds of audio samples for every video frame, but for simplicity's sake only four are shown here. The clock used to timestamp a particular sample is commonly called a media clock, and here's what the A/V stream would look like if the audio and video capture devices share a media clock:

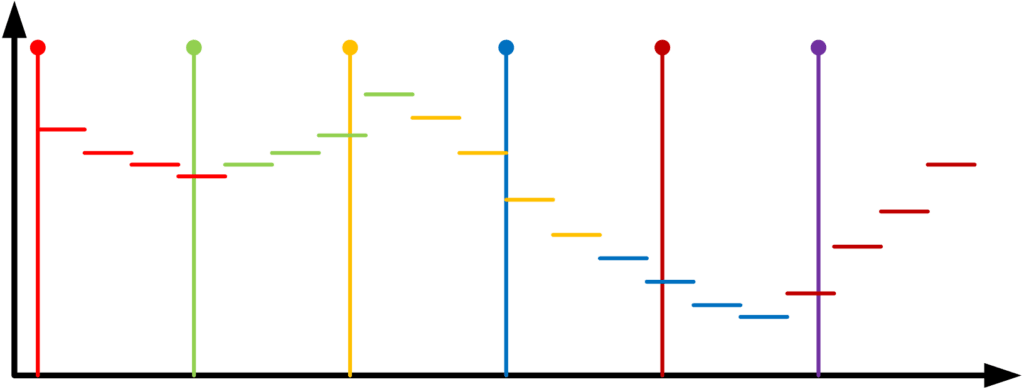
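To make the shared-clock case concrete, here is a minimal Python sketch, assuming nominal rates of 30 frames per second and 48,000 samples per second (illustrative values, not taken from any particular device). When both streams are stamped from one media clock, a timestamp is simply an index divided by a rate, so video frame 30 and audio sample 48,000 land on exactly the same instant, and each frame period spans 1,600 audio samples. The helper names are hypothetical.

```python
from fractions import Fraction

# Illustrative nominal rates (assumed); real devices and applications vary.
VIDEO_FPS = 30
AUDIO_RATE_HZ = 48_000

def video_timestamp(frame_index: int, fps: int = VIDEO_FPS) -> Fraction:
    """Capture time in seconds of a video frame, stamped from the shared media clock."""
    return Fraction(frame_index, fps)

def audio_timestamp(sample_index: int, rate_hz: int = AUDIO_RATE_HZ) -> Fraction:
    """Capture time in seconds of an audio sample, stamped from the same clock."""
    return Fraction(sample_index, rate_hz)

def samples_per_frame(rate_hz: int = AUDIO_RATE_HZ, fps: int = VIDEO_FPS) -> Fraction:
    """How many audio samples fall within one video frame period."""
    return Fraction(rate_hz, fps)

if __name__ == "__main__":
    print("audio samples per video frame:", samples_per_frame())
    # With one clock, frame 30 and sample 48,000 describe the same instant (t = 1 s).
    print("aligned at 1 s:", video_timestamp(30) == audio_timestamp(48_000))
```

Using `Fraction` keeps the timestamps exact, so the equality check is not affected by floating-point rounding. At 44,100 Hz and 60 fps, the same helper gives 735 samples per frame.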

However, when capture devices have separate media clocks, it is unlikely that those clocks will march in lockstep. (That kind of lockstep operation can be obtained using genlock.) With separate media clocks, the timestamps that the video and audio devices assign to data captured at the same instant will drift apart over time, like in this image:
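To put rough numbers on that drift, suppose each device timestamps data by counting ticks of its own clock, so a clock that runs fast by some parts per million (ppm) assigns proportionally larger timestamps. The sketch below assumes a simple constant-frequency-error model with hypothetical errors of +20 ppm for the audio clock and -30 ppm for the video clock; real oscillators also wander with temperature and age. The 45 ms threshold is an arbitrary example value, not a standard. All function names are hypothetical.

```python
import math

def device_timestamp(true_time_s: float, ppm_error: float) -> float:
    """Timestamp (seconds) a device assigns at true_time_s when its media clock
    runs ppm_error parts per million fast (positive) or slow (negative).
    Assumes a constant frequency error and both clocks starting at zero."""
    return true_time_s * (1.0 + ppm_error * 1e-6)

def av_offset(true_time_s: float, audio_ppm: float, video_ppm: float) -> float:
    """Audio timestamp minus video timestamp for data captured at the same instant."""
    return device_timestamp(true_time_s, audio_ppm) - device_timestamp(true_time_s, video_ppm)

def seconds_until_drift_exceeds(threshold_s: float, audio_ppm: float, video_ppm: float) -> float:
    """True elapsed time before the A/V timestamp offset grows past threshold_s."""
    relative_error = abs(audio_ppm - video_ppm) * 1e-6
    if relative_error == 0:
        return math.inf  # identical clock rates never drift apart under this model
    return threshold_s / relative_error

if __name__ == "__main__":
    AUDIO_PPM, VIDEO_PPM = 20.0, -30.0  # hypothetical clock errors
    for minutes in (1, 10, 60):
        offset_ms = av_offset(minutes * 60, AUDIO_PPM, VIDEO_PPM) * 1000
        print(f"after {minutes:>2} min: audio timestamps ahead of video by {offset_ms:.1f} ms")
    limit_s = seconds_until_drift_exceeds(0.045, AUDIO_PPM, VIDEO_PPM)
    print(f"example 45 ms threshold reached after ~{limit_s:.0f} s")
```

With a 50 ppm relative error, the offset grows by 3 ms per minute and reaches 180 ms after an hour, which is why even small clock mismatches matter for long recordings.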

Because the camera's and microphone's media clocks run at slightly different rates, their timestamps drift apart and playback falls out of sync. So now we know why synchronization is so important. On Friday, I'll talk about how to adjust both audio and video to eliminate sync issues.