Audio is an integral part of many systems, but unfortunately it is often corrupted by noise. This is especially true in systems that use a microphone in a noisy environment, such as an automobile or a room with a fan.

The Challenge to Reduce Noise Through Signal Processing

One of our clients confronted a particularly noisy environment and asked us to find a computationally tractable way to reduce the noise through signal processing while keeping non-recurring engineering (NRE) costs to a minimum. The overall system needed to run in real time, and the algorithm couldn’t hog the CPU.

With these requirements in mind, we gathered multiple audio recordings from the client’s deployment environment and analyzed their characteristics. Because the audio of interest contained only intermittent voice, there were periods with no voice at all, and during those periods the background noise level could be estimated adaptively. A real-time spectrograph allowed the noise estimation to be done on a band-by-band basis. Not surprisingly, given the client’s particular audio environment, some bands were noisier than others.
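To make the band-by-band estimation concrete, here is a minimal sketch of one way to track a noise floor per band during the gaps in speech. It is not the client implementation: the function name `update_noise_estimates`, the smoothing factor `alpha`, and the `voice_ratio` test are all assumptions chosen for illustration.

```python
def update_noise_estimates(noise_power, band_power, alpha=0.9, voice_ratio=4.0):
    """Return new per-band noise power estimates for one audio block.

    noise_power: current noise power estimate for each band
    band_power:  measured power in each band for the current block
    alpha:       smoothing factor (assumed value); closer to 1 adapts more slowly
    voice_ratio: a band this many times above its estimate is assumed to hold voice
    """
    updated = []
    for noise, power in zip(noise_power, band_power):
        if power < voice_ratio * noise:
            # Looks like background only: nudge the estimate toward the measurement.
            updated.append(alpha * noise + (1.0 - alpha) * power)
        else:
            # Probably voice: freeze the estimate so speech does not inflate it.
            # Caveat: a sudden, lasting jump in noise would also freeze this band.
            updated.append(noise)
    return updated


# Three bands with different noise floors; the middle band sees one loud burst.
estimates = [1.0, 4.0, 0.5]
for block in ([1.2, 4.4, 0.4], [1.1, 90.0, 0.6], [0.9, 3.8, 0.5]):
    estimates = update_noise_estimates(estimates, block)
```

Each band keeps its own estimate, so a band that is consistently noisier than its neighbors simply settles at a higher floor.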

The Adaptive Noise Removal Solution

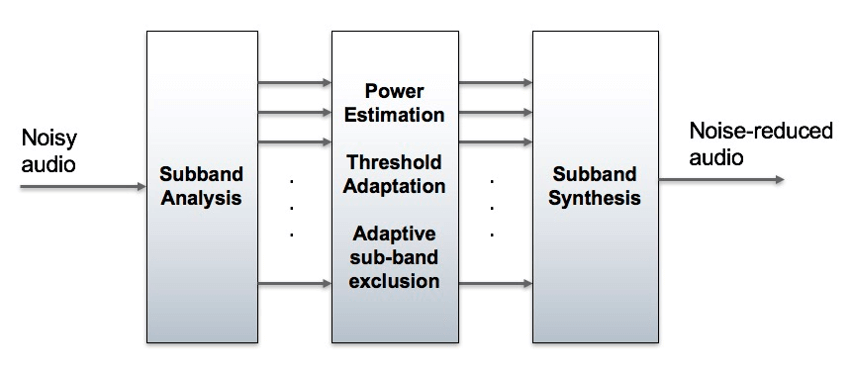

After considering several alternatives, we settled on an adaptive technique for noise removal that works in the frequency domain. The fundamental signal processing chain involved the following steps:

- Analyze the audio signal in several frequency bands

- Maintain adaptive estimates of the noise power in each band; derive from these estimates adaptive thresholds for inclusion/exclusion of each band in the current audio output

- On a block-by-block basis, include/exclude bands by comparing their current power level to the adaptive thresholds

- Synthesize the sub-band filtered audio back into an audio waveform
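The four steps above can be sketched end to end in a few lines of NumPy. This is only an illustration under stated assumptions: it uses a windowed FFT with 50% overlap as a stand-in for the analysis/synthesis filter bank, groups FFT bins into uniform bands, and assumes the recording starts with background noise only. The function name `remove_noise` and all parameter values are hypothetical.

```python
import numpy as np


def remove_noise(x, frame=512, num_bands=32, alpha=0.9, threshold_factor=3.0):
    """Gate noisy bands block by block; returns a signal the same length as x."""
    x = np.asarray(x, dtype=float)
    hop = frame // 2
    # Periodic sqrt-Hann window: analysis window times synthesis window
    # overlap-adds to 1 at 50% overlap (away from the signal edges).
    window = np.sqrt(np.hanning(frame + 1)[:-1])
    # Step 1 setup: split the FFT bins into uniform frequency bands.
    band_bins = np.array_split(np.arange(frame // 2 + 1), num_bands)
    out = np.zeros(len(x))
    noise = None
    for start in range(0, len(x) - frame + 1, hop):
        # Step 1: analyze the current block in several frequency bands.
        spec = np.fft.rfft(window * x[start:start + frame])
        power = np.array([np.mean(np.abs(spec[b]) ** 2) for b in band_bins])
        if noise is None:
            noise = power.copy()  # assumes the first block is background only
        # Steps 2 and 3: compare each band with its adaptive threshold, and
        # update the noise estimate only in bands judged to be background.
        keep = power > threshold_factor * noise
        noise = np.where(keep, noise, alpha * noise + (1.0 - alpha) * power)
        for bins, kept in zip(band_bins, keep):
            if not kept:
                spec[bins] = 0.0  # exclude this band from the output
        # Step 4: synthesize the gated bands back into a waveform.
        out[start:start + frame] += window * np.fft.irfft(spec, n=frame)
    return out
```

Calling `remove_noise(samples)` on a mono array of samples returns the gated waveform. The hard include/exclude decision mirrors the description above; a real deployment would need tuning of the threshold and adaptation rate against recordings from the target environment.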

In a noisy environment, our adaptive noise removal algorithm significantly enhances voice clarity while maintaining real-time performance without straining the CPU.

To perform the sub-band analysis, we ultimately decided to use the same critically sampled filter bank employed by the MP3 audio compression standard. This filter bank was a good choice because:

- It has readily available computationally efficient implementations

- A perfect reconstruction analysis/synthesis filter bank is not required for noise removal applications as the original signal is relatively low-quality to begin with

- The MP3 filter bank has excellent resistance to artifacts introduced by zeroing out some sub-bands. This isn’t surprising given that MP3 compression itself introduces quantization noise in sub-bands and must be robust against distortions during synthesis.

The diagram below shows the basic processing architecture.

Basic Signal Processing Architecture

The Resulting Algorithm Performance

The algorithm was quick to develop and relatively easy to tune. It was also computationally efficient given the design choices we made. Overall it performs quite well, while avoiding the computational overhead of more sophisticated algorithms. The client was extremely happy with both the performance and the NRE required to develop, tune, and test the algorithm.

If you are looking for digital signal processing services, reach out to our team to discuss your product and get estimates for timeline and budget.

For more information on boosting signal quality and reducing noise with digital signal processing, check out our blog posts: