A global ICT services leader operating in 26 countries faced a common hurdle in product development: transforming a promising AI capability into a reliable, real-world system. They needed to replace manual employee verification with an automated biometric solution capable of delivering a frictionless, sub-second user experience while integrating with varied physical hardware across thousands of global locations. They partnered with Cardinal Peak/FPT, drawing on our expertise in engineering embedded biometric systems, to develop a complete edge-based integration layer.

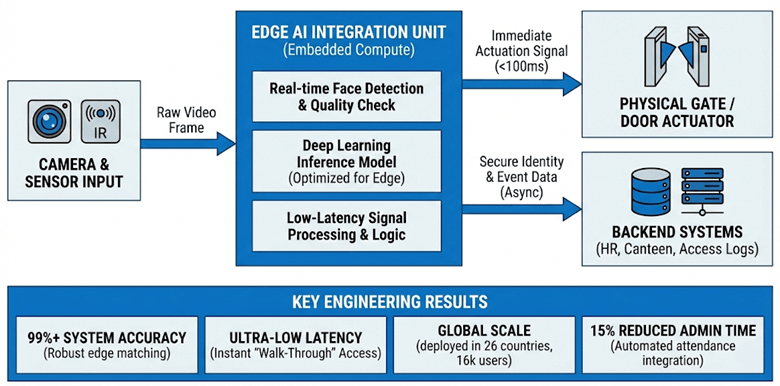

We didn’t just deploy a model. By optimizing facial recognition for edge compute and building robust interfaces to physical security gates and backend systems, we delivered a solution with over 99% accuracy and sub-second response times that now serves more than 16,000 employees worldwide.

The Challenge: The Engineering Reality of “Instant” Access

Any engineer building a user-facing hardware product knows that latency kills the experience: a security gate that takes two seconds to open is a failed product. We engineered the system to solve three core technical challenges:

- Edge Latency Limitations: Cloud-based facial recognition would introduce unacceptable network lag. Inference had to happen at the edge, instantly.

- Complex Hardware Integration: The AI system needed reliable, low-level communication with physical actuators in security gates and legacy canteen point-of-sale protocols.

- Global Scalability: The solution had to perform consistently for 16,000 users across diverse environments in 26 countries without requiring constant on-site support.

In high-scale hardware products, latency isn’t just a metric; it’s the difference between a seamless user journey and a bottleneck. Our task was to engineer the embedded stack so that “instant” became a physical reality at the edge.

The Engineering Solution: Optimizing the Embedded Stack

We delivered a high-performance embedded integration layer that bridges the gap between visual AI and physical hardware.

High-Performance Edge AI Inference

We engineered the system to run facial recognition models directly on edge devices located at the access points. By optimizing the model for the target hardware platform and keeping inference on-device, we removed the network round trip from the critical path, so the “recognition-to-action” loop completes in milliseconds for a frictionless “walk-through” experience.
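A minimal sketch of an on-device “recognition-to-action” loop, assuming each stage is injected as a plain function. The stage functions (`detect`, `embed`, `match`, `actuate`) and the 300 ms budget are hypothetical placeholders, not the project’s actual components or figures; the point is that every stage runs locally, so the whole loop can be timed end to end with no network hop inside it.

```python
import time
from typing import Callable, Optional, Sequence

# Hypothetical end-to-end budget for illustration; the source only states "sub-second".
LATENCY_BUDGET_S = 0.3

def recognize_and_act(
    frame: bytes,
    detect: Callable[[bytes], Optional[bytes]],
    embed: Callable[[bytes], Sequence[float]],
    match: Callable[[Sequence[float]], Optional[str]],
    actuate: Callable[[str], None],
) -> dict:
    """Run detect -> embed -> match -> actuate on-device and time each stage."""
    timings = {}
    start = time.perf_counter()

    t0 = time.perf_counter()
    face = detect(frame)                  # face crop, or None if no face found
    timings["detect"] = time.perf_counter() - t0

    user_id = None
    if face is not None:
        t0 = time.perf_counter()
        vector = embed(face)              # on-device embedding model
        timings["embed"] = time.perf_counter() - t0

        t0 = time.perf_counter()
        user_id = match(vector)           # local template search, no network call
        timings["match"] = time.perf_counter() - t0

        if user_id is not None:
            t0 = time.perf_counter()
            actuate(user_id)              # signal the gate immediately
            timings["actuate"] = time.perf_counter() - t0

    total = time.perf_counter() - start
    return {
        "user_id": user_id,
        "timings": timings,
        "total_s": total,
        "within_budget": total <= LATENCY_BUDGET_S,
    }
```

Per-stage timings make it clear which stage to optimize first when the total drifts toward the budget.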

Robust Hardware & System Integration

This was the core of the embedded engineering effort. We developed the necessary middleware and APIs to translate the AI model’s output into immediate actions:

- Physical Gate Control: Sending low-latency signals to security turnstiles and door actuators for immediate entry.

- Backend Synchronization: Integrating real-time identity data with automated time-attendance and canteen management systems, removing manual data entry from the loop.
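The middleware’s job of turning one “match found” result into the right physical and backend actions can be sketched as a small dispatcher. The access-point kinds (`gate`, `canteen`), the record fields, and the `open_gate` callback are assumptions for illustration; the real integration targets the client’s own gate controllers and backend systems.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List

@dataclass
class MatchResult:
    user_id: str
    access_point: str   # hypothetical identifier, e.g. "HQ-turnstile-3"
    kind: str           # hypothetical: "gate" or "canteen"

@dataclass
class Dispatcher:
    open_gate: Callable[[str], None]                  # low-latency hardware path
    outbox: List[dict] = field(default_factory=list)  # records awaiting backend sync

    def handle(self, result: MatchResult) -> None:
        now = datetime.now(timezone.utc).isoformat()
        if result.kind == "gate":
            # Actuate first so the user never waits on bookkeeping.
            self.open_gate(result.access_point)
            self.outbox.append({"type": "attendance", "user": result.user_id,
                                "point": result.access_point, "at": now})
        elif result.kind == "canteen":
            # No actuator here; the match replaces a manual identification step.
            self.outbox.append({"type": "meal", "user": result.user_id,
                                "point": result.access_point, "at": now})
        else:
            raise ValueError(f"unknown access point kind: {result.kind}")
```

Keeping the hardware call ahead of the record-keeping is the design choice that matters: backend bookkeeping can lag, but the gate must not.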

Scalable eKYC Foundation

Leveraging our experience with banking-grade eKYC (Electronic Know Your Customer) platforms, we ensured the biometric enrollment and matching process was secure, accurate (99%+), and capable of handling a database of 16,000 global users without performance degradation.
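As an illustration of 1:N matching against an enrolled database, here is a minimal cosine-similarity search, assuming face embeddings are fixed-length float vectors. The `TemplateGallery` class and its default threshold are hypothetical; a real threshold is tuned per model against target false-accept and false-reject rates. A linear scan is the simplest correct baseline; at tens of thousands of templates, a vectorized matrix-vector product or an index is a common next step.

```python
import math
from typing import Dict, List, Optional, Tuple

def l2_normalize(v: List[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("zero-length embedding")
    return [x / norm for x in v]

class TemplateGallery:
    """1:N search over enrolled face templates using cosine similarity."""

    def __init__(self, threshold: float = 0.6):
        self.threshold = threshold  # hypothetical value; tuned per model in practice
        self._templates: Dict[str, List[float]] = {}

    def enroll(self, user_id: str, embedding: List[float]) -> None:
        # Normalize once at enrollment so matching is a plain dot product.
        self._templates[user_id] = l2_normalize(embedding)

    def identify(self, embedding: List[float]) -> Tuple[Optional[str], float]:
        probe = l2_normalize(embedding)
        best_id, best_score = None, -1.0
        for user_id, template in self._templates.items():
            score = sum(a * b for a, b in zip(probe, template))
            if score > best_score:
                best_id, best_score = user_id, score
        # Reject when even the closest template is below the threshold.
        if best_score < self.threshold:
            return None, best_score
        return best_id, best_score
```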

The Results: A Frictionless, Scalable Product Experience

The engineered embedded system transformed a slow manual process into a seamless, automated user experience.

- 99%+ System Accuracy: Optimized edge models deliver high-precision matching in real-world conditions.

- Ultra-Low Latency: Sub-second recognition and gate actuation eliminate user wait times.

- Proven Global Scale: The embedded architecture is deployed across 26 countries for 16,000 users.

- Measurable Efficiency: The automated system drove a 10% reduction in personnel costs and a 15% reduction in time spent on attendance management.

Embedded Biometric Integration Architecture

We engineered a complete, multi-layer embedded solution designed for speed and reliability.

Edge AI Layer

Custom-trained facial detection and recognition models optimized for low-power embedded inference engines.
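The source does not say which optimizations this project applied. As an illustration of one common technique for low-power inference engines, here is post-training symmetric int8 quantization of a single weight tensor in plain Python. Real deployments typically rely on the target inference engine’s own toolchain and calibration data rather than hand-rolled code like this.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w ~= scale * q, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the signed 8-bit range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 values back to approximate float weights."""
    return [v * scale for v in q]
```

Each weight now needs one byte instead of four, and the per-weight rounding error is bounded by half a quantization step (`scale / 2`).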

Embedded Software Layer

Real-time C/C++ application logic handling sensor input, model execution, and low-latency decision-making.
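One piece of low-latency decision-making can be sketched as temporal voting: act only when several consecutive frames agree on the same identity. This is written in Python for readability even though the layer described above is C/C++, and the three-frame window is a hypothetical value, not one taken from the project.

```python
from collections import deque
from typing import Deque, Optional

class ConsecutiveMatchGate:
    """Fire a decision only after N consecutive frames agree on the same user ID."""

    def __init__(self, required_frames: int = 3):
        self.required = required_frames  # hypothetical window size
        self._recent: Deque[Optional[str]] = deque(maxlen=required_frames)

    def update(self, user_id: Optional[str]) -> Optional[str]:
        """Feed one per-frame match result; return the user ID when the decision fires."""
        self._recent.append(user_id)
        if (len(self._recent) == self.required
                and self._recent[0] is not None
                and all(u == self._recent[0] for u in self._recent)):
            decided = self._recent[0]
            self._recent.clear()  # avoid re-triggering on the very next frame
            return decided
        return None
```

The trade-off is explicit: each extra frame in the window adds one frame period of latency but makes a single spurious match much less likely to open the gate.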

Hardware Interface Layer

Custom middleware and drivers that deliver reliable actuation signals to a variety of physical security gate hardware.
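Supporting varied gate hardware is commonly handled with an adapter layer: the decision logic calls one interface, and each hardware family gets its own driver. In this sketch, the relay and serial drivers, the `set_line` and `write` callables, and the STX/ETX frame with an XOR checksum and opcode `0x10` are all hypothetical; they do not describe any real vendor’s protocol.

```python
import time
from abc import ABC, abstractmethod
from typing import Callable

class GateDriver(ABC):
    """Common interface the decision logic calls, whatever gate is installed."""

    @abstractmethod
    def open(self) -> None: ...

class RelayGateDriver(GateDriver):
    """Dry-contact style gate: assert an output line, hold it, then release it."""

    def __init__(self, set_line: Callable[[bool], None], hold_s: float):
        self.set_line = set_line  # hypothetical GPIO write function
        self.hold_s = hold_s

    def open(self) -> None:
        self.set_line(True)      # energize the relay
        time.sleep(self.hold_s)  # hold long enough for the mechanism to react
        self.set_line(False)

class SerialGateDriver(GateDriver):
    """Turnstile controller that accepts a framed command over a serial link."""

    STX, ETX = 0x02, 0x03
    OPEN_CMD = 0x10  # hypothetical opcode

    def __init__(self, write: Callable[[bytes], None], address: int):
        self.write = write  # hypothetical serial write function
        self.address = address

    def frame(self, cmd: int) -> bytes:
        body = bytes([self.address, cmd])
        checksum = 0
        for b in body:
            checksum ^= b  # simple XOR checksum over address and command
        return bytes([self.STX]) + body + bytes([checksum, self.ETX])

    def open(self) -> None:
        self.write(self.frame(self.OPEN_CMD))
```

Adding a new gate model then means writing one new `GateDriver` subclass, with no change to the recognition or decision code.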

System Integration Layer

Secure, asynchronous APIs syncing identity data with backend ERPs without blocking gate operations.
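Non-blocking synchronization can be sketched with an `asyncio.Queue`: the gate path only enqueues an event and returns, while a background task forwards events to the backend and retries transient failures. The `post` coroutine stands in for a hypothetical ERP client, the retry counts are illustrative, and transport security (TLS, authentication) is omitted from this sketch.

```python
import asyncio
from typing import Awaitable, Callable, List

PostFn = Callable[[dict], Awaitable[None]]

def record_event(queue: asyncio.Queue, event: dict) -> None:
    """Gate path: enqueue the event and return immediately; never awaits the network."""
    queue.put_nowait(event)

async def sync_worker(queue: asyncio.Queue, post: PostFn, dead_letter: List[dict],
                      max_attempts: int = 3, backoff_s: float = 0.5) -> None:
    """Background task: forward events to the backend, retrying transient failures."""
    while True:
        event = await queue.get()
        try:
            for attempt in range(1, max_attempts + 1):
                try:
                    await post(event)  # hypothetical ERP/HR API call
                    break
                except ConnectionError:
                    if attempt == max_attempts:
                        dead_letter.append(event)  # keep for later replay
                    else:
                        await asyncio.sleep(backoff_s * attempt)  # linear backoff
        finally:
            queue.task_done()
```

If the backend is slow or unreachable, events accumulate in the queue or the dead-letter list, but gate actuation is unaffected.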

The Engine Behind the Solution

This project drew on our expertise in Edge AI, Embedded Software, and Hardware Integration. Our ability to optimize AI models for speed and connect them reliably to physical-world devices makes us the ideal partner for engineering embedded products.

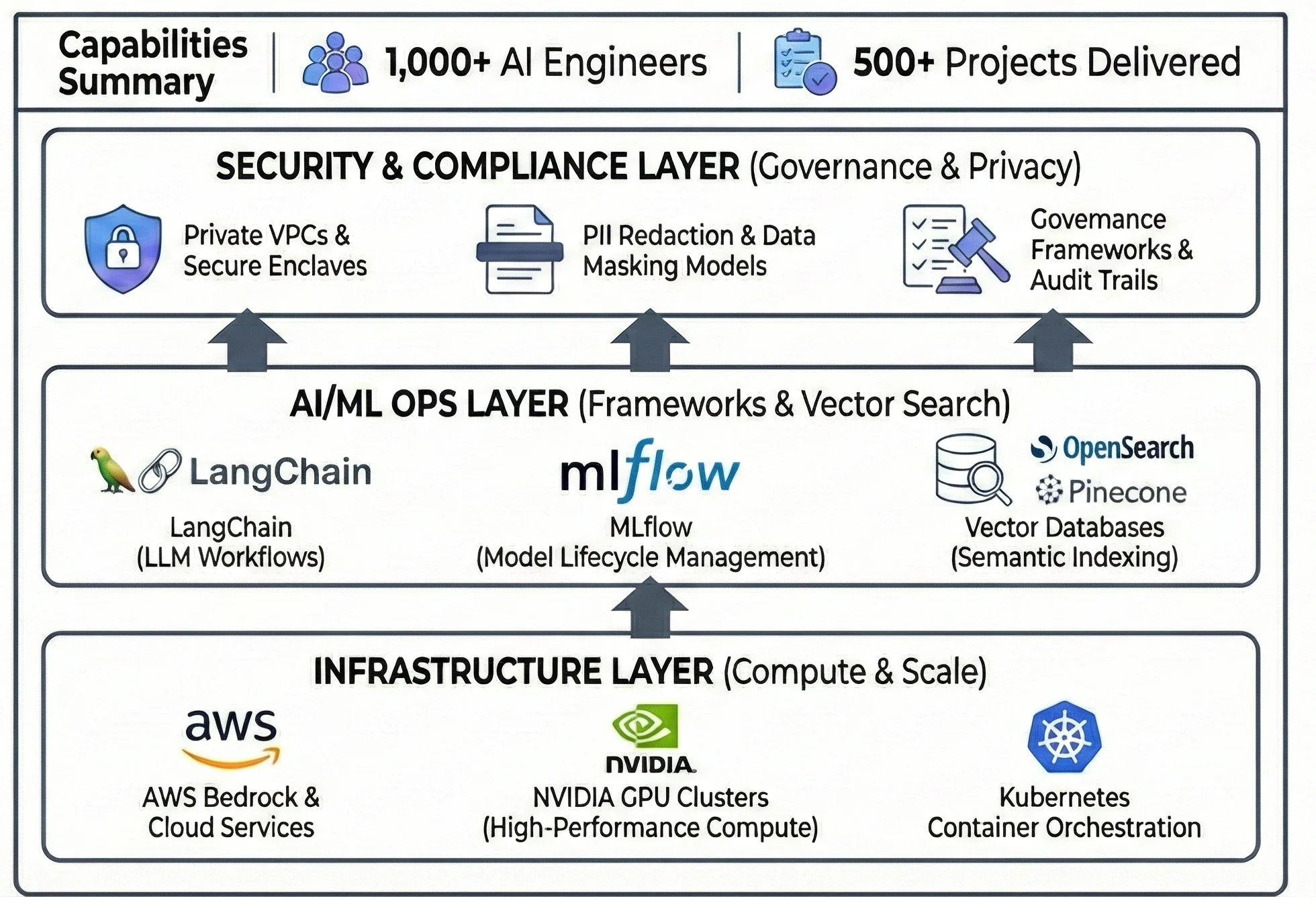

Our AI CoE Capabilities

Embedded AI Engineering Frequently Asked Questions

Why is "edge-based" processing critical for biometric access products?

Latency is the enemy of user experience. If a security gate has to wait for a server in the cloud to process an image, users get frustrated. Edge-based facial recognition integration means the AI inference happens instantly on the device itself, providing the sub-second response time necessary for a frictionless “flow-through” product experience.

What are the challenges of integrating AI with physical hardware like security gates?

The challenge is bridging the gap between high-level software and low-level hardware. It requires low-latency embedded vision development to ensure that the AI’s “match found” signal is instantly translated into the correct electrical impulse to actuate a motor or solenoid in the gate, reliably, thousands of times a day.
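Making an actuation reliable “thousands of times a day” usually means closing the loop: pulse the output, then confirm through a sensor that the gate actually moved. The sketch below assumes hypothetical `set_output` and `door_opened` callables and illustrative timing parameters; the source does not describe how this project confirms actuation.

```python
import time
from typing import Callable

def actuate_with_feedback(
    set_output: Callable[[bool], None],   # hypothetical GPIO/relay write
    door_opened: Callable[[], bool],      # hypothetical position-sensor read
    pulse_s: float,
    confirm_timeout_s: float,
    retries: int = 1,
    poll_s: float = 0.005,
) -> bool:
    """Pulse the actuator, then wait for the sensor to confirm movement.

    Returns True once the sensor reports the gate opened, or False after
    `retries` extra attempts have all gone unconfirmed.
    """
    for _ in range(retries + 1):
        set_output(True)              # energize the solenoid or motor driver
        try:
            time.sleep(pulse_s)       # hold for the mechanism's required pulse width
        finally:
            set_output(False)         # always de-energize, even if interrupted
        deadline = time.monotonic() + confirm_timeout_s
        while time.monotonic() < deadline:
            if door_opened():
                return True
            time.sleep(poll_s)
    return False
```

A `False` return is the signal to raise a maintenance alert rather than silently leave a user standing at a closed gate.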

Can high-accuracy models be optimized to run on lower-cost embedded processors?

Yes. A key part of our engineering services is model optimization (e.g., quantization and pruning), which fits high-accuracy models onto cost-effective edge compute platforms without significant loss of accuracy. This is crucial for managing the Bill of Materials (BOM) cost in product development.
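Pruning, the second technique named above, can be illustrated with unstructured magnitude pruning: zero out the smallest-magnitude fraction of a layer’s weights. This is a generic sketch, not the project’s pipeline. In practice, pruning is usually followed by fine-tuning to recover accuracy, and unstructured sparsity only saves compute when the inference runtime can exploit it.

```python
from typing import List

def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest |w|."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Rank indices by magnitude; ties broken by position for determinism.
    order = sorted(range(len(weights)), key=lambda i: (abs(weights[i]), i))
    to_zero = set(order[:k])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]
```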

How scalable is an embedded biometric solution?

Highly scalable. This specific solution was engineered to support 16,000 users across 26 countries. By processing data at the edge and only syncing necessary identity templates with the backend, the architecture minimizes bandwidth usage and maintains high performance regardless of the number of deployed locations.
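One way to sync “only necessary identity templates” is versioned delta sync: each edge site remembers the last change version it applied and fetches only newer changes. The source does not describe the actual sync protocol; `BackendStore`, `EdgeCache`, and the change-log format below are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class TemplateChange:
    version: int
    user_id: str
    template: Optional[bytes]  # None means the user was removed

@dataclass
class BackendStore:
    """Hypothetical central store keeping an append-only change log."""
    log: List[TemplateChange] = field(default_factory=list)

    def upsert(self, user_id: str, template: bytes) -> None:
        self.log.append(TemplateChange(len(self.log) + 1, user_id, template))

    def remove(self, user_id: str) -> None:
        self.log.append(TemplateChange(len(self.log) + 1, user_id, None))

    def changes_since(self, version: int) -> List[TemplateChange]:
        return [c for c in self.log if c.version > version]

@dataclass
class EdgeCache:
    """Local template copy on an edge device; applies only unseen changes."""
    version: int = 0
    templates: Dict[str, bytes] = field(default_factory=dict)

    def sync(self, backend: BackendStore) -> int:
        changes = backend.changes_since(self.version)
        for c in changes:
            if c.template is None:
                self.templates.pop(c.user_id, None)
            else:
                self.templates[c.user_id] = c.template
            self.version = c.version
        return len(changes)
```

With this shape, a routine sync costs bandwidth proportional to the number of enrollment changes, not to the size of the full user database.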

What is the role of an AI hardware integration service in product development?

An AI hardware integration service bridges the gap between high-level AI models and low-level physical hardware. As an engineering services firm, Cardinal Peak does not manufacture off-the-shelf products. Instead, we provide the custom embedded software, edge model development, and low-latency middleware required to power a client’s proprietary hardware devices, ensuring the AI intelligence communicates reliably with physical actuators and backend systems.