Just as cloud computing has evolved over the last decade from a trendy industry buzzword into the standard way to store, manage and process data, another computing concept, the edge, is about to take off.

Organizations are trying to keep pace with more connected devices, more data and more systems than ever before, as well as applications that require real-time decision-making, and the cloud may struggle to keep up. Cloud computing has certainly sped up processing compared with on-premises solutions, but because data must travel to and from remote data centers, latency is becoming increasingly noticeable.

Consequently, modern organizations require a new strategy for processing data. Enter edge computing.


What is Edge Computing?

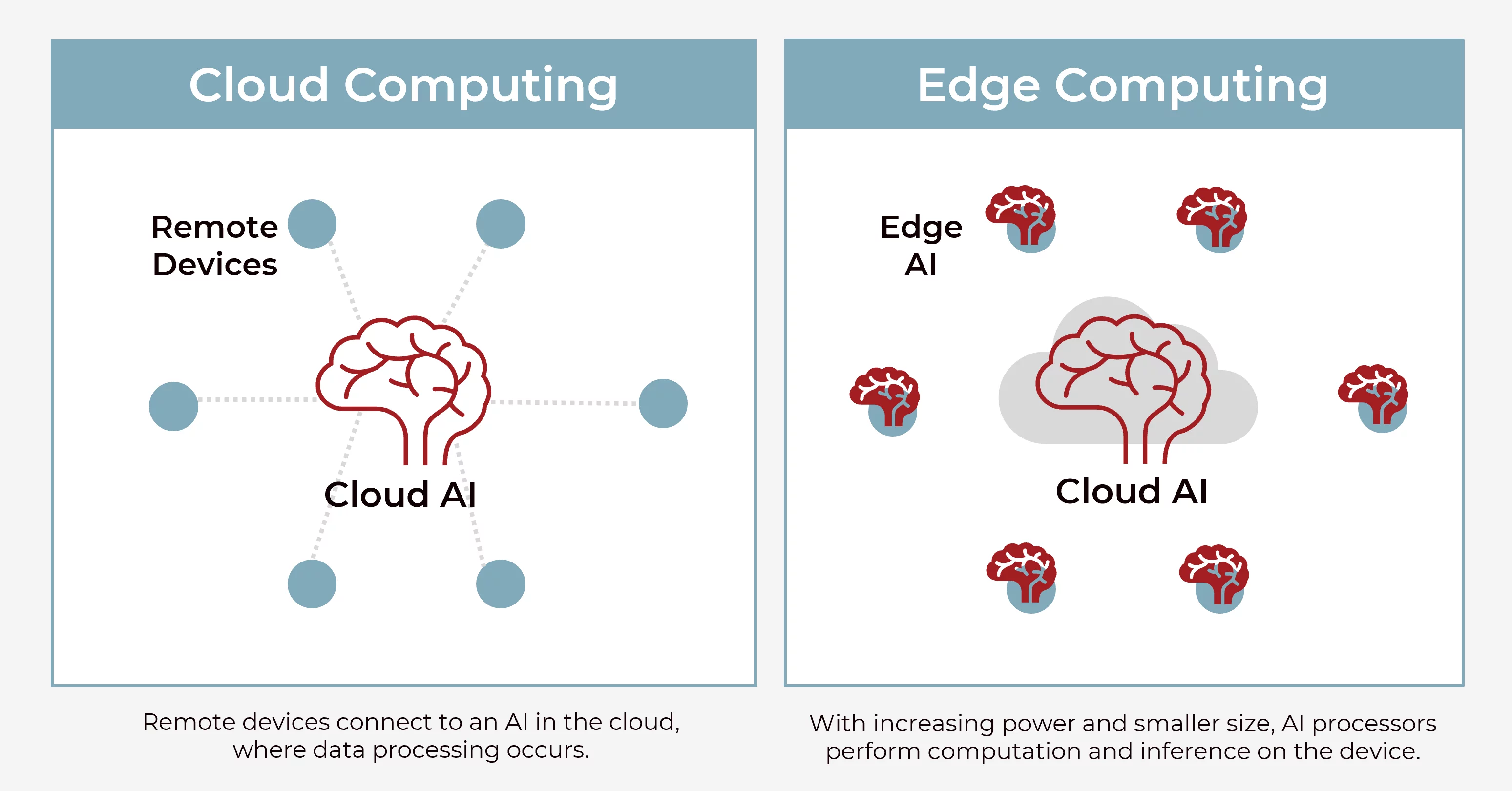

Edge computing is a distributed information technology architecture that places computing and storage resources closer to the user or device, at the “edge” of the network where the data is produced. Instead of relying on cloud data centers that might be thousands of miles away, edge computing processes more data near its source, which reduces latency.
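To put “thousands of miles away” into numbers, the sketch below estimates the minimum round-trip time imposed by distance alone. It assumes signals travel through optical fiber at roughly 200,000 km/s (about two-thirds of the speed of light in a vacuum); the distances are illustrative, and real latency is always higher because of routing, queuing and processing.

```python
# Assumption: light in optical fiber travels at roughly 200,000 km/s.
FIBER_SPEED_KM_PER_S = 200_000.0
KM_PER_MILE = 1.609344

def min_round_trip_ms(distance_miles: float) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    Ignores routing detours, queuing and server processing time,
    all of which only add to the real figure.
    """
    distance_km = distance_miles * KM_PER_MILE
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000.0

for miles in (10, 500, 3000):
    print(f"{miles:>5} miles: >= {min_round_trip_ms(miles):.1f} ms round trip")
```

A data center 3,000 miles away costs at least about 48 ms per round trip before any computation happens, while a nearby edge node adds well under a millisecond.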

According to Gartner, more than 75% of enterprise data will be created and processed outside the data center or cloud in just the next five years. With modern businesses drowning in an ocean of data, organizations across industries are beginning to change the way they handle computing.

From bandwidth limitations and latency issues to unpredictable network disruptions and the cost of high transaction volumes in the cloud, the traditional computing model, built on a centralized data center and the public internet, isn’t well suited to managing the seemingly never-ending deluge of real-world data being generated.

To keep up with this shifting paradigm, an increasing number of organizations are moving their computing power closer to where data is generated to reduce network congestion and latency — and ultimately to extract the maximum amount of value from their data.

In short, edge computing moves some storage and compute resources out of the centralized data center and closer to the source of the data itself, bringing the cloud to you.

Edge AI and ML

Edge computing lets organizations use this locally generated data to improve business outcomes. But because new applications at the edge continuously produce massive amounts of data, and organizations need real-time responses based on that data, companies are deploying artificial intelligence (AI) and machine learning (ML) solutions at the edge so that more of the processing can happen there efficiently.

Consider an example. At home, you might have a security camera running a facial recognition system. The camera could continuously stream video frames to the cloud for analysis, which means transmitting a large volume of data at a high frequency. With edge computing, the camera is no longer a dumb device that only relays data: it can use edge ML to run inference locally and send just the predictions in real time.
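A rough way to see the difference is to compare daily upload volumes for the two designs. The figures below are hypothetical: a 2 Mbit/s compressed video stream versus 500 recognition events a day, each reported as a message of about 200 bytes.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def streaming_bytes_per_day(bitrate_mbps: float) -> float:
    """Bytes uploaded per day when the camera streams video continuously."""
    return bitrate_mbps * 1_000_000 / 8 * SECONDS_PER_DAY

def prediction_bytes_per_day(events_per_day: int, bytes_per_event: int) -> int:
    """Bytes uploaded per day when only on-device predictions are sent."""
    return events_per_day * bytes_per_event

# Hypothetical figures, not measurements from a real camera.
stream = streaming_bytes_per_day(2.0)
edge = prediction_bytes_per_day(500, 200)
print(f"cloud streaming: {stream / 1e9:.1f} GB/day")    # 21.6 GB/day
print(f"edge predictions: {edge / 1e3:.1f} kB/day")     # 100.0 kB/day
print(f"reduction: {stream / edge:,.0f}x")              # 216,000x
```

The exact ratio depends entirely on the assumed bitrate and event rate, but sending predictions instead of frames typically cuts upload volume by orders of magnitude.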

When AI and ML algorithms run locally on devices at the edge of the network, those devices can process data, make decisions independently and generate useful insights even when the internet connection is down.
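One common pattern behind this behavior is store-and-forward: the device always decides locally and buffers its results until a connection is available. The sketch below is a minimal illustration under that assumption; the `EdgeNode` class, the threshold rule standing in for a trained model, and the temperature-like readings are all hypothetical.

```python
from collections import deque
from typing import Callable, Deque, List

class EdgeNode:
    """Hypothetical edge device that decides locally and syncs when it can."""

    def __init__(self, infer: Callable[[float], str], max_backlog: int = 1000):
        self.infer = infer
        # Bounded buffer: the oldest results are dropped if an outage lasts too long.
        self.backlog: Deque[str] = deque(maxlen=max_backlog)
        self.uploaded: List[str] = []

    def handle(self, reading: float, online: bool) -> str:
        decision = self.infer(reading)  # runs on the device, no network needed
        self.backlog.append(decision)
        if online:
            self.flush()
        return decision

    def flush(self) -> None:
        while self.backlog:
            # Stand-in for a real upload call.
            self.uploaded.append(self.backlog.popleft())

# A simple threshold rule stands in for a trained model here.
node = EdgeNode(lambda t: "alert" if t > 80.0 else "ok")
node.handle(72.0, online=False)
node.handle(91.0, online=False)           # still decides "alert" while offline
print(len(node.backlog), node.uploaded)   # 2 []
node.handle(65.0, online=True)            # connection restored: backlog is flushed
print(len(node.backlog), node.uploaded)   # 0 ['ok', 'alert', 'ok']
```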

The Differences Between Edge AI and Cloud AI Development

Despite the similarities between edge and cloud computing, there are some development differences of which engineers need to be aware.

In general, when implementing ML/AI, even for the cloud, developers should pay close attention to the kind of algorithm they use and the model structure they select, taking into account any compute and resource limitations that could surface later on. It’s always better to plan ahead and account for those limitations during design and initial discovery than to build the whole ML pipeline and discover them along the way.

When you’re developing for the edge, you know your target devices in advance, and they are typically neither powerful nor fast. As a result, you have to be very strict about model size and memory utilization when you select your machine learning algorithm. Memory, size, performance and power consumption matter in any AI/ML work, and they remain important in the cloud too; there, however, these concerns are usually addressed by adding more compute, which comes with a high price tag. Furthermore, for security and privacy reasons, some applications require data to stay on-site. Edge AI suits such applications well because the data never has to leave the premises.
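A quick budget check makes the size constraint concrete. The sketch below counts only the storage for model weights and compares 32-bit floating-point weights with 8-bit quantized weights, a widely used technique for shrinking edge models. The 250,000-parameter model and the 512 KiB budget are hypothetical, and a real deployment also needs memory for activations and the inference runtime.

```python
def model_size_bytes(num_params: int, bits_per_param: int) -> int:
    """Storage needed for a model's weights alone (no activations or runtime)."""
    return num_params * bits_per_param // 8

def fits(num_params: int, bits_per_param: int, budget_bytes: int) -> bool:
    """True if the weights fit within the memory budget."""
    return model_size_bytes(num_params, bits_per_param) <= budget_bytes

# Hypothetical: a 250k-parameter network and 512 KiB set aside for weights.
PARAMS = 250_000
BUDGET = 512 * 1024

for bits in (32, 8):
    size = model_size_bytes(PARAMS, bits)
    print(f"{bits}-bit weights: {size / 1024:.0f} KiB, fits={fits(PARAMS, bits, BUDGET)}")
# 32-bit weights: 977 KiB, fits=False
# 8-bit weights: 244 KiB, fits=True
```

Running this kind of arithmetic before choosing an architecture is one way to surface memory limits during design rather than after the pipeline is built.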

For a connected device, the lifetime cloud cost must be built into the sales price (or recurring subscription) for that device. Pushing processing to the edge may increase the bill of materials cost for parts, but it lowers the lifetime cloud cost while improving latency and reliability. Plus, edge devices keep working well, and can still communicate their much smaller results, even over a poor internet connection.
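The tradeoff can be framed as a simple break-even calculation: extra hardware cost up front versus lower recurring cloud cost. Every number below (bill of materials, monthly cloud cost, a 60-month service life) is hypothetical and only illustrates the arithmetic.

```python
def lifetime_cost(bom: float, monthly_cloud: float, months: int) -> float:
    """Hardware cost plus cloud cost over the device's service life."""
    return bom + monthly_cloud * months

def break_even_months(extra_bom: float, monthly_savings: float) -> float:
    """Months until lower cloud spending pays back the extra edge hardware."""
    return extra_bom / monthly_savings

# Hypothetical per-device figures over a 5-year (60-month) life.
cloud_only = lifetime_cost(bom=20.0, monthly_cloud=1.50, months=60)
edge_ml = lifetime_cost(bom=28.0, monthly_cloud=0.25, months=60)
print(f"cloud-centric: ${cloud_only:.2f}, edge ML: ${edge_ml:.2f}")
# cloud-centric: $110.00, edge ML: $43.00
print(f"break-even after {break_even_months(28.0 - 20.0, 1.50 - 0.25):.1f} months")
# break-even after 6.4 months
```

If the expected service life is shorter than the break-even point, the cloud-centric design would come out cheaper under the same assumptions.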

While the cloud has revolutionized the way we handle data, computing that can be done at the device level is often best handled at the edge for cost, reliability and data privacy reasons. The deciding question is whether sending data to the cloud adds enough value, for example by combining it with other data, to be worth the cost.

So, when would an organization opt to leverage edge computing instead of cloud computing?

At the Edge vs. in the Cloud: Which Is Best for You?

Edge AI vs. Cloud AI Tradeoffs

- Cost: Supporting edge AI/ML raises the bill of materials cost, but with high volumes of transactions in the cloud, the device’s lifetime cloud communication cost rises as well. Which cost will be lower?

- Reliability/Latency: Will the device always be deployed in locations with high-speed internet, or will some fraction of deployments have slow or intermittent connectivity? Edge AI removes the latency of any network transfer.

- Communications Networks: Does the network your device uses to communicate data carry an incremental cost? Some networks, including cellular networks, do, so developers need to find the right balance. Likewise, if bandwidth is limited or network costs are high, you don’t want to send data to the cloud frequently.

- Data Privacy: Since some applications require data to be stored on-site, would keeping certain data entirely on the device expand the available market?

- Power: When the edge sends less data to the cloud, less power goes to network communications. However, the added computational load of ML increases power drain, potentially offsetting some of those savings.

- Storage: People, machines and “things” are expected to generate a staggering 2.5 quintillion bytes of data daily. All of that data has to be stored somewhere, and edge devices generally lack the capacity to hold it, so long-term storage still relies on the cloud.
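The power tradeoff in the list above comes down to two terms: radio energy spent uploading data and compute energy spent on inference. The sketch below compares them for a hypothetical camera; the radio cost of 1 µJ per byte, the 20 kB frame size, one frame per second and 5 mJ per inference are all assumed values, since real figures vary widely with the radio, the chip and the model.

```python
def daily_energy_joules(bytes_sent: float, joules_per_byte: float,
                        inferences: int, joules_per_inference: float) -> float:
    """Radio energy for uploads plus compute energy for on-device inference."""
    return bytes_sent * joules_per_byte + inferences * joules_per_inference

# Hypothetical device parameters.
RADIO_J_PER_BYTE = 1e-6      # 1 microjoule per byte transmitted
FRAMES_PER_DAY = 86_400      # one frame per second
FRAME_BYTES = 20_000         # compressed frame size
RESULT_BYTES = 500 * 200     # 500 small results a day

# Option 1: upload every frame, no local inference.
send_everything = daily_energy_joules(FRAMES_PER_DAY * FRAME_BYTES, RADIO_J_PER_BYTE, 0, 0.0)
# Option 2: run inference on every frame (5 mJ each) and upload only results.
infer_locally = daily_energy_joules(RESULT_BYTES, RADIO_J_PER_BYTE, FRAMES_PER_DAY, 5e-3)

# Edge inference saves energy only while each inference costs less than this.
max_j_per_inference = (send_everything - RESULT_BYTES * RADIO_J_PER_BYTE) / FRAMES_PER_DAY

print(f"send everything: {send_everything:.0f} J/day")
print(f"infer locally:   {infer_locally:.0f} J/day")
print(f"break-even inference cost: {max_j_per_inference * 1e3:.1f} mJ")
```

Under these assumptions local inference wins, but a model that costs more than about 20 mJ per frame would erase the savings, which is the offsetting effect the Power bullet describes.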

Reducing the load on the cloud with edge computing shifts part of the work from a central server to the devices. This opens the door to distributed learning (often called federated learning), in which many edge devices collaboratively train models on their local data instead of relying on a centralized training framework.
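A well-known way to implement this idea is federated averaging (FedAvg), sketched below for a one-parameter linear model. Each device runs a few gradient-descent steps on its own data, and a server averages the resulting weights in proportion to each device’s sample count, so raw data never leaves a device. The model, the three simulated devices and the learning rate are hypothetical choices made to keep the example small.

```python
import random
from typing import List, Tuple

Dataset = List[Tuple[float, float]]

def local_update(w: float, data: Dataset, lr: float = 0.1, epochs: int = 5) -> float:
    """Gradient descent on mean squared error for y = w * x, using only local data."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_round(w_global: float, devices: List[Dataset]) -> float:
    """One FedAvg round: each device trains locally, then the server averages
    the weights in proportion to each device's sample count."""
    total = sum(len(d) for d in devices)
    return sum(local_update(w_global, d) * len(d) / total for d in devices)

random.seed(0)
TRUE_W = 3.0
devices: List[Dataset] = []
for n in (20, 50, 30):  # three hypothetical devices with different amounts of data
    xs = [random.uniform(0, 2) for _ in range(n)]
    devices.append([(x, TRUE_W * x + random.gauss(0, 0.1)) for x in xs])

w = 0.0
for _ in range(30):
    w = federated_round(w, devices)
print(f"learned w = {w:.2f}")  # should be close to 3.0
```

Only the single weight travels between devices and server each round; in a real system the same pattern applies to full model parameter vectors.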

From smartphones, tablets, smart speakers and wearables to robots, cameras, sensors and other IoT devices, edge AI will find its way into a growing number of consumer electronics and enterprise applications thanks to its real-time processing advantages. Choosing between edge and cloud computing isn’t an either-or proposition: combining edge computing with centralized cloud computing lets organizations get the most out of both approaches while minimizing their limitations. Edge AI can run the models that make decisions and take actions, while cloud services continually learn from those models’ performance to provide deeper insights and improve them.
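One common way to split the work between edge and cloud, offered here as an assumption rather than a prescribed design, is to let the device act on every prediction immediately and forward only low-confidence cases to the cloud, where they can be reviewed and used to retrain the model. The `HybridPipeline` class, the 0.8 confidence threshold and the sample data are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class HybridPipeline:
    """Edge decides immediately; uncertain cases are queued for the cloud."""
    threshold: float = 0.8  # hypothetical confidence cutoff
    for_cloud: List[Tuple[str, str, float]] = field(default_factory=list)

    def decide(self, sample_id: str, label: str, confidence: float) -> str:
        # The device always acts on its own prediction, so latency stays low.
        if confidence < self.threshold:
            # Low-confidence cases tend to be the most useful for retraining.
            self.for_cloud.append((sample_id, label, confidence))
        return label

pipe = HybridPipeline()
for sid, label, conf in [("f1", "person", 0.97), ("f2", "cat", 0.55), ("f3", "person", 0.62)]:
    pipe.decide(sid, label, conf)
print([s for s, _, _ in pipe.for_cloud])  # ['f2', 'f3']
```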

Combining the high-volume data-gathering and reliability possibilities edge computing unlocks with the storage capacity and processing power of the cloud enables organizations to quickly and efficiently run their applications and IoT devices. Even better, they can do so without sacrificing valuable analytical data that could help them to improve their goods and services and drive innovation.

If you’re still struggling to decide whether the cloud or the edge is best for your business, give us a shout! We are not only experts in cloud transformation services, but we also recognize the advantages of running workloads on edge devices and have begun experimenting with building and deploying large deep learning models on such devices.

Check out our “how to” posts detailing how to build applications with the OpenMV board and the CubeAI tool and reach out today to discuss your project!
