The “internet of things” (IoT) is exploding as connected devices are increasingly embedded in many aspects of our daily lives.

Beyond computers, smartphones and smartwatches, almost anything can be connected to the internet today, from thermostats, lightbulbs and fitness trackers to door locks, refrigerators and even blinds. Experts predict there will be 41 billion IoT devices by 2027, with another 127 devices connecting every second. That’s a lot of “things.”

With the proliferation of IoT devices, connected device testing has become more important than ever. As with any product, connected or not, thorough testing helps ensure that a defect in one device won’t degrade the performance of the rest of the system. Testing makes the system’s behavior predictable, surfaces potentially harmful bugs and helps ensure the device meets both a high quality bar and the expectations of end users.

This first part of a two-part blog series recaps a lunch and learn I recently hosted on how our QA team used technology to augment manual testing and work more efficiently on an IoT project.

How Can I Automate IoT Testing for a Connected Device?

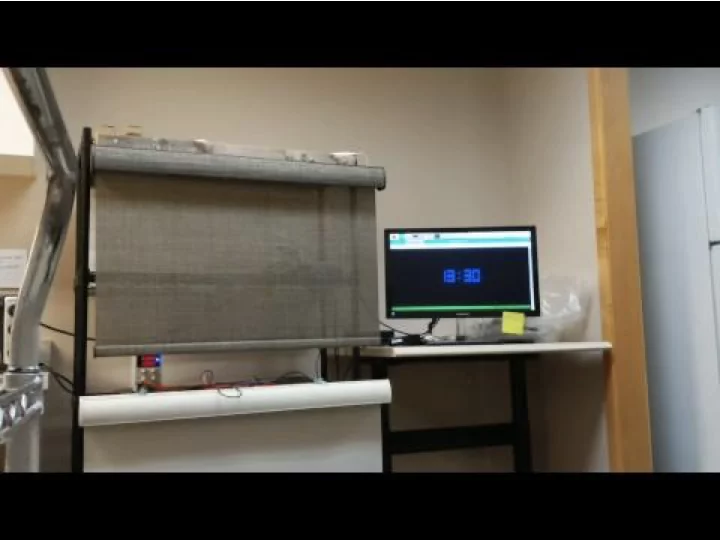

Given the tremendous impact IoT devices are likely to have on the world, the lunch and learn focused on the testing infrastructure and IoT automation we built for the product. We instrumented a fairly sophisticated system: smart blinds and a six-button remote control, an iOS/Android application that controls the remote, and a data collection and analysis system. Throughout, our emphasis was on keeping the product stable as features were added.

The blinds weren’t originally designed to be automated, so we used software to extend their capabilities and let them operate on a schedule. Working with a system that combined a physical sensor to detect whether a blind is open or closed, tools for data collection and analysis, and a front-end database that ingests, aggregates and presents the data in a useful way came with myriad challenges:

- The system is difficult to test and prone to human error.

- We had limited visibility into what was actually happening with the blinds because there was one-way communication between the remote and blinds.

- Reproducing issues tied to scheduled events is time-consuming and difficult.

- The smartphone app doesn’t control the blinds directly; it communicates with and controls the blinds’ remote control via Bluetooth Low Energy (BLE). The remote then issues commands to the blinds over a proprietary protocol on the 2.4GHz band, and the blinds send no acknowledgment back to the phone application that a command succeeded.
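Because the blinds never acknowledge a command, an automated check has to infer success from an independent observation, such as a sensor watching the blind. Below is a minimal Python sketch of that closed-loop check. The function name `verify_command`, the `"up"`/`"down"` state strings and the timeout values are hypothetical; `send_command` and `read_state` stand in for whatever actually drives the app or remote and reads the sensor.

```python
import time
from typing import Callable


def verify_command(
    send_command: Callable[[], None],
    read_state: Callable[[], str],
    expected_state: str,
    timeout_s: float = 30.0,
    poll_interval_s: float = 0.5,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Send a command, then poll an independent sensor until the blind
    reports expected_state or the timeout expires.

    The blinds never acknowledge commands, so success is judged only by
    what the sensor observes, not by the app or the remote.
    """
    send_command()
    deadline = clock() + timeout_s
    while True:
        # Read first so a state reached right at the deadline still counts.
        if read_state() == expected_state:
            return True
        if clock() >= deadline:
            return False
        sleep(poll_interval_s)
```

Injecting `clock` and `sleep` is a design choice that lets the same loop run against a simulated blind in unit tests without real delays, while the defaults use the real monotonic clock.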

To test the blinds effectively, we set out to use technology and off-the-shelf components to automate the work and free up the team for other tasks (e.g., regression tests, feature checking). Other goals included quantifying changes and trend data over time and ensuring that remote firmware changes did not affect the overall performance of the system.

Installing a four-pin ultrasonic blind-tracking sensor that monitors the physical state (up or down) of the blinds 24/7 proved incredibly helpful. The blind tracker captured remote logs that could be correlated with the captured events to build an interactive chart, hosted on cloud infrastructure for easy sharing. The tracking sensor also let us overlay predefined schedules on large data sets to detect missed schedule events.
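The schedule overlay can be sketched in two steps: turn raw distance readings into up/down transitions, then flag any scheduled event that has no matching transition nearby. This Python sketch assumes a sensor that reads a short distance when the blind is down. The 20 cm threshold, the two-minute tolerance and all function and class names are hypothetical illustrations, not details of our actual setup.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List, Optional, Tuple

# Hypothetical: the sensor faces the blind, so a lowered blind reads close.
DOWN_BELOW_CM = 20.0


@dataclass(frozen=True)
class Transition:
    time: datetime
    state: str  # "up" or "down"


@dataclass(frozen=True)
class ScheduledEvent:
    time: datetime
    state: str  # state the schedule expects the blind to move to


def to_transitions(readings: Iterable[Tuple[datetime, float]]) -> List[Transition]:
    """Turn time-ordered (timestamp, distance_cm) readings into state changes.

    The first reading only establishes the starting state; it is not a change.
    """
    transitions: List[Transition] = []
    current: Optional[str] = None
    for ts, distance_cm in readings:
        state = "down" if distance_cm < DOWN_BELOW_CM else "up"
        if current is not None and state != current:
            transitions.append(Transition(ts, state))
        current = state
    return transitions


def find_missed_events(
    schedule: Iterable[ScheduledEvent],
    transitions: Iterable[Transition],
    tolerance: timedelta = timedelta(minutes=2),
) -> List[ScheduledEvent]:
    """Return scheduled events with no same-state transition within +/- tolerance.

    Each observed transition can satisfy at most one scheduled event.
    """
    unused = list(transitions)
    missed: List[ScheduledEvent] = []
    for event in sorted(schedule, key=lambda e: e.time):
        match = next(
            (t for t in unused
             if t.state == event.state and abs(t.time - event.time) <= tolerance),
            None,
        )
        if match is None:
            missed.append(event)
        else:
            unused.remove(match)
    return missed
```

The symmetric tolerance window absorbs small clock differences between the schedule and the sensor, and real ultrasonic readings would likely need smoothing before thresholding. The same matching step could also be pointed at the remote’s command log instead of the schedule, flagging commands the blinds never acted on.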

By automating the data collection portion of this IoT system, we effectively extended the team’s capacity. Without this setup, we would have had to spend valuable time and resources capturing this data manually, a method very prone to human error. But be careful with automation. If testers spend all of their time developing automated tests and do no manual or exploratory testing, they may not actually be hunting for issues, and they may lack the means to test certain aspects of the IoT system at all.

Beyond automation, test engineers and quality assurance (QA) teams regularly face common internet of things testing challenges that can arise anywhere from the start to the end of the development process. Part two of this blog series will dive into those IoT testing challenges and highlight some of the details IoT testers need to take into account to deliver a successful product and the best possible user experience.