In today’s fast-paced world, IoT devices generate massive amounts of data, but only about 1% of that information is used. As a result, harnessing the power of machine learning is more crucial than ever for businesses across industries. While traditional ML models require substantial computing resources and centralized data processing, a new paradigm, TinyML, has emerged that enables machine learning directly at the edge.

This blog post aims to provide a comprehensive overview of TinyML and its potential applications. We’ll also delve into the benefits of TinyML and discuss some of the tools and technologies available.

Understanding TinyML

What is TinyML? TinyML, or tiny machine learning, is a fast-growing field of machine learning technologies and applications, including hardware, algorithms and software, capable of performing on-device sensor data analytics at extremely low latency and low power, typically in the milliwatt (mW) range and below. Because no unnecessary raw data is sent across the network, it also reduces data bandwidth. TinyML enables a variety of always-on use cases and targets battery-operated devices.

A standard consumer CPU consumes between 65 and 85 watts and a standard consumer GPU between 200 and 500 watts, but a typical microcontroller consumes about 1,000 times less power, on the order of milliwatts or microwatts. That low power consumption enables TinyML devices to run on battery power alone for weeks, months and, in some cases, even years.

Comparing Power Consumption of Typical Consumer Devices

| Typical Consumer Device | Power Consumption |
|---|---|
| Central Processing Unit (CPU) | 65 – 85 watts |
| Graphics Processing Unit (GPU) | 200 – 500 watts |
| Microcontroller | 0.2 – 0.5 watts (typically measured in milliwatts or microwatts) |
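To make the battery-life claim concrete, the Python sketch below estimates how long a duty-cycled device runs on a coin cell. Every number in it is an illustrative assumption rather than a measured figure: a roughly 225 mAh CR2032 cell, 10 mA while the microcontroller samples and runs inference, 5 µA in deep sleep, and activity 1% of the time. The helper names `average_current_ma` and `battery_life_days` are hypothetical.

```python
def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float) -> float:
    """Time-weighted average current for a device active a fraction `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return active_ma * duty_cycle + sleep_ma * (1.0 - duty_cycle)


def battery_life_days(capacity_mah: float, avg_current_ma: float) -> float:
    """Idealized battery life in days (ignores self-discharge, temperature and voltage sag)."""
    return capacity_mah / avg_current_ma / 24.0


# Illustrative assumptions only, not measurements of any specific board.
CAPACITY_MAH = 225.0  # approximate CR2032 coin-cell capacity
ACTIVE_MA = 10.0      # MCU + sensor while sampling and running inference
SLEEP_MA = 0.005      # deep-sleep current (5 µA)
DUTY_CYCLE = 0.01     # active 1% of the time

avg_ma = average_current_ma(ACTIVE_MA, SLEEP_MA, DUTY_CYCLE)
print(f"average current: {avg_ma:.4f} mA")
print(f"always on:       {battery_life_days(CAPACITY_MAH, ACTIVE_MA):.1f} days")
print(f"duty-cycled:     {battery_life_days(CAPACITY_MAH, avg_ma):.1f} days")
```

Under these assumptions the device lasts less than a day if it never sleeps but roughly three months when duty-cycled, which illustrates why low-power designs keep the processor asleep most of the time. Real battery life will be shorter than this idealized estimate.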

TinyML models are optimized to efficiently execute complex computations, enabling real-time decision-making at the edge. By empowering edge devices to perform tasks locally, TinyML eliminates the need to transfer data to the cloud for analysis, reducing latency and enhancing privacy. Consequently, leveraging TinyML is ideal for always-on use cases and battery-operated devices.

With TinyML focused on sensor data intelligence, and the cost of sensor technology steadily decreasing, the global edge and embedded AI market is expected to grow rapidly between now and 2030.

According to Pete Warden, former technical lead of the TensorFlow group at Google, TinyML will spread across industries, touching seemingly every sector:

- Retail

- Healthcare

- Wellness

- Fitness

- Transportation

- Agriculture

- Manufacturing and industry

- Smart homes

- Environment and sustainability

- Consumer electronics

TinyML is gaining a lot of industry attention, and for good reason: by combining deep learning and embedded systems, TinyML delivers numerous advantages.

TinyML Benefits

As mentioned above, beyond requiring significantly less power than traditional machine learning approaches, the benefits of TinyML include low latency, reduced bandwidth and enhanced privacy and security.

- Low Latency: TinyML brings intelligence closer to the data source, enabling faster response times and reducing reliance on cloud-based processing and data analysis. TinyML’s lower-latency output is crucial for time-sensitive applications that require real-time decision-making.

- Improved Energy Efficiency: Optimized for edge devices with limited computational resources and power constraints, TinyML models perform ML computations locally, reducing the need for constant data transmission, optimizing energy consumption and extending the device’s battery life.

- Reduced Bandwidth: Transmitting raw sensor data to the cloud for processing is expensive and strains network bandwidth. With TinyML, edge devices can perform initial data analysis and transmit only relevant information, reducing both the amount of data sent to the cloud and the bandwidth it consumes.

- Enhanced Privacy and Security: Because sensitive information is maintained and processed on the device rather than in the cloud, TinyML models help keep your raw data off remote servers. Plus, securing a single device is easier and less costly than securing the entire network.
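The bandwidth saving can be sketched as follows. In this hypothetical example, the device computes the RMS energy of each window of accelerometer samples locally and transmits a compact 8-byte message (window index plus RMS value) only when a threshold is exceeded. The fixed threshold stands in for a trained model's decision, and the 16-bit raw sample size and message layout are assumptions for illustration.

```python
import struct
from typing import List, Sequence

SAMPLE_BYTES = 2  # assumption: raw samples would be sent as 16-bit integers


def window_rms(samples: Sequence[float]) -> float:
    """Root-mean-square magnitude of one window of sensor samples."""
    return (sum(s * s for s in samples) / len(samples)) ** 0.5


def messages_to_send(windows: Sequence[Sequence[float]], threshold: float) -> List[bytes]:
    """Analyze each window on the device and keep a compact message only for windows of interest.

    Each message packs (window index: uint32, RMS: float32) little-endian, i.e. 8 bytes.
    """
    messages = []
    for index, window in enumerate(windows):
        rms = window_rms(window)
        if rms > threshold:  # stand-in for a trained model's "event detected" output
            messages.append(struct.pack("<If", index, rms))
    return messages


# Synthetic data: 100 quiet windows of 128 samples, three of which contain a strong event.
windows = [[0.1] * 128 for _ in range(100)]
for event_index in (10, 50, 90):
    windows[event_index] = [2.0] * 128

messages = messages_to_send(windows, threshold=1.0)
raw_bytes = len(windows) * 128 * SAMPLE_BYTES
sent_bytes = sum(len(m) for m in messages)
print(f"raw: {raw_bytes} bytes, transmitted: {sent_bytes} bytes")
```

In this synthetic run, 25,600 bytes of raw samples shrink to 24 bytes of event messages; the real ratio depends entirely on how often events occur and what each message must carry.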

By leveraging the advantages of TinyML, companies across industries can unlock the full potential of connected edge devices, optimize their operations and deliver smarter, more efficient and cost-effective solutions.

TinyML Applications

Beyond the well-known TinyML use cases in activity trackers (such as Apple, Samsung, Google or Garmin smartwatches) and Alexa or Google smart speakers, TinyML applications include the following.

Equipment Maintenance

TinyML enables edge devices to analyze sensor data in real time, detecting anomalies or patterns indicative of potential failures. By continuously monitoring equipment health indicators such as vibration, temperature, pressure or power consumption, TinyML models can identify early signs of equipment degradation, wear or misalignment and give decision-makers accurate information to predict when equipment will fail or require maintenance.

Traditional maintenance approaches may lead to unnecessary maintenance actions or false alarms, wasting valuable resources and time. TinyML models can be trained to differentiate between normal variations in equipment behavior and actual impending failure indicators. Plus, with remote monitoring and diagnostics, TinyML can reduce the need for physical presence at the equipment location and enable faster response times. By predicting maintenance needs ahead of time, companies can optimize maintenance schedules, minimize downtime and reduce costs.
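A minimal way to picture "learn normal behavior, flag deviations" is a rolling z-score detector over vibration readings, sketched below in Python. This is a simple statistical baseline swapped in for illustration, not a trained TinyML model; a deployed system could run a learned model (for example, an autoencoder or classifier) inside the same loop. The class name, window size and threshold are hypothetical choices.

```python
import math
from collections import deque
from typing import Deque, Iterable, List


class RollingZScoreDetector:
    """Flags readings that deviate from a rolling baseline by more than `z_threshold` std devs."""

    def __init__(self, window: int = 50, z_threshold: float = 4.0, min_samples: int = 10):
        self.history: Deque[float] = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def update(self, value: float) -> bool:
        """Return True if `value` looks anomalous relative to recent normal history."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            mean = sum(self.history) / len(self.history)
            var = sum((x - mean) ** 2 for x in self.history) / len(self.history)
            std = math.sqrt(var) or 1e-9  # avoid division by zero on a perfectly flat signal
            anomalous = abs(value - mean) / std > self.z_threshold
        if not anomalous:
            self.history.append(value)  # only learn the baseline from readings judged normal
        return anomalous


def find_anomalies(readings: Iterable[float], **kwargs) -> List[int]:
    """Indices of readings flagged as anomalous."""
    detector = RollingZScoreDetector(**kwargs)
    return [i for i, r in enumerate(readings) if detector.update(r)]


readings = [1.0 + 0.01 * (i % 5) for i in range(200)]  # synthetic periodic vibration level
readings[150] = 3.0                                     # injected fault spike
print(find_anomalies(readings))
```

Flagged readings are kept out of the baseline, so a single spike does not distort what the detector treats as normal.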

Quality Control

When used to monitor and analyze products in real time, TinyML can help identify variations during production to improve quality control and reduce waste. By deploying TinyML models on edge devices within the production line, companies can automate the inspection and sorting of manufactured goods, accurately differentiating between normal variations in product quality and actual defects, reducing false positives and enabling more precise and reliable quality control.

TinyML also enables immediate feedback on product quality during manufacturing processes so that if a deviation or defect is detected, the edge device can trigger real-time adjustments or alerts to operators, enabling them to take prompt corrective actions.

Integrating TinyML into edge devices ensures that quality control processes are continuously monitored and improved over time. By analyzing the collected data, companies can identify patterns, correlations or root causes of quality issues to optimize processes and enhance the overall product quality.

Gesture Recognition and Human-Computer Interaction

TinyML plays a crucial role in gesture recognition and human-computer interaction (HCI). By analyzing sensor data in real time on the device, it enables intuitive, interactive experiences.

By training machine learning models on gesture data sets, TinyML can identify specific hand movements, body postures or facial expressions, enabling gesture-based control and interaction with various devices or applications, such as gaming, virtual reality, augmented reality, robotics, smart home and automotive.

With TinyML, gesture recognition can be performed locally on the edge device, reducing latency for an instantaneous response and a seamless user experience. TinyML also enables personalized gesture recognition, letting users control interfaces or manipulate virtual objects with gestures tailored to their own preferences.
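As a rough illustration of the on-device classification step, the sketch below turns a window of 3-axis accelerometer samples into a six-value feature vector (per-axis mean and standard deviation) and assigns it to the nearest gesture centroid. A nearest-centroid classifier is swapped in here for simplicity; production gesture recognizers usually run a trained neural network, and the gesture names and synthetic data are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[float, float, float]  # (x, y, z) acceleration


def features(window: Sequence[Sample]) -> List[float]:
    """Per-axis mean and standard deviation: a compact 6-value feature vector."""
    feats: List[float] = []
    for axis in range(3):
        values = [s[axis] for s in window]
        mean = sum(values) / len(values)
        std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
        feats.extend([mean, std])
    return feats


def train_centroids(examples: Dict[str, List[Sequence[Sample]]]) -> Dict[str, List[float]]:
    """Average the feature vectors of each labeled gesture (done offline, e.g. on a PC)."""
    centroids = {}
    for label, windows in examples.items():
        vectors = [features(w) for w in windows]
        centroids[label] = [sum(col) / len(col) for col in zip(*vectors)]
    return centroids


def classify(window: Sequence[Sample], centroids: Dict[str, List[float]]) -> str:
    """On-device step: pick the gesture whose centroid is nearest in feature space."""
    f = features(window)
    return min(centroids, key=lambda label: math.dist(f, centroids[label]))


def shake(amplitude: float = 2.0, n: int = 20) -> List[Sample]:
    """Synthetic side-to-side shake with gravity on the z axis."""
    return [((amplitude if i % 2 else -amplitude), 0.0, 1.0) for i in range(n)]


examples = {
    "shake": [shake()],
    "tilt": [[(0.7, 0.0, 0.7)] * 20],
    "rest": [[(0.0, 0.0, 1.0)] * 20],
}
centroids = train_centroids(examples)
print(classify(shake(amplitude=1.8), centroids))
print(classify([(0.6, 0.0, 0.8)] * 20, centroids))
```

Only the small centroid table has to be stored on the device, and classifying a window costs a handful of arithmetic operations per sample.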

Energy Optimization

TinyML enables intelligent decision-making at the edge, reducing energy consumption and optimizing the efficiency of devices and systems.

Localized processing minimizes the need for constant data transfer, reducing energy consumption associated with data transmission and cloud processing. TinyML devices can analyze sensor data and energy usage patterns in real time to help drive intelligent decision-making around optimal energy consumption. These actions include dynamically adjusting device settings, turning off idle components or activating power-saving modes to diminish energy waste.

This is especially valuable in smart homes and building automation systems where sensor data and user behavior pattern analysis can optimize energy usage for heating, cooling, lighting and appliance control. TinyML-powered energy optimization systems can also provide feedback and insights on energy consumption patterns and performance to enable continuous optimization and improvement of energy efficiency strategies over time, leading to long-term energy savings.

Tools and Technologies for TinyML

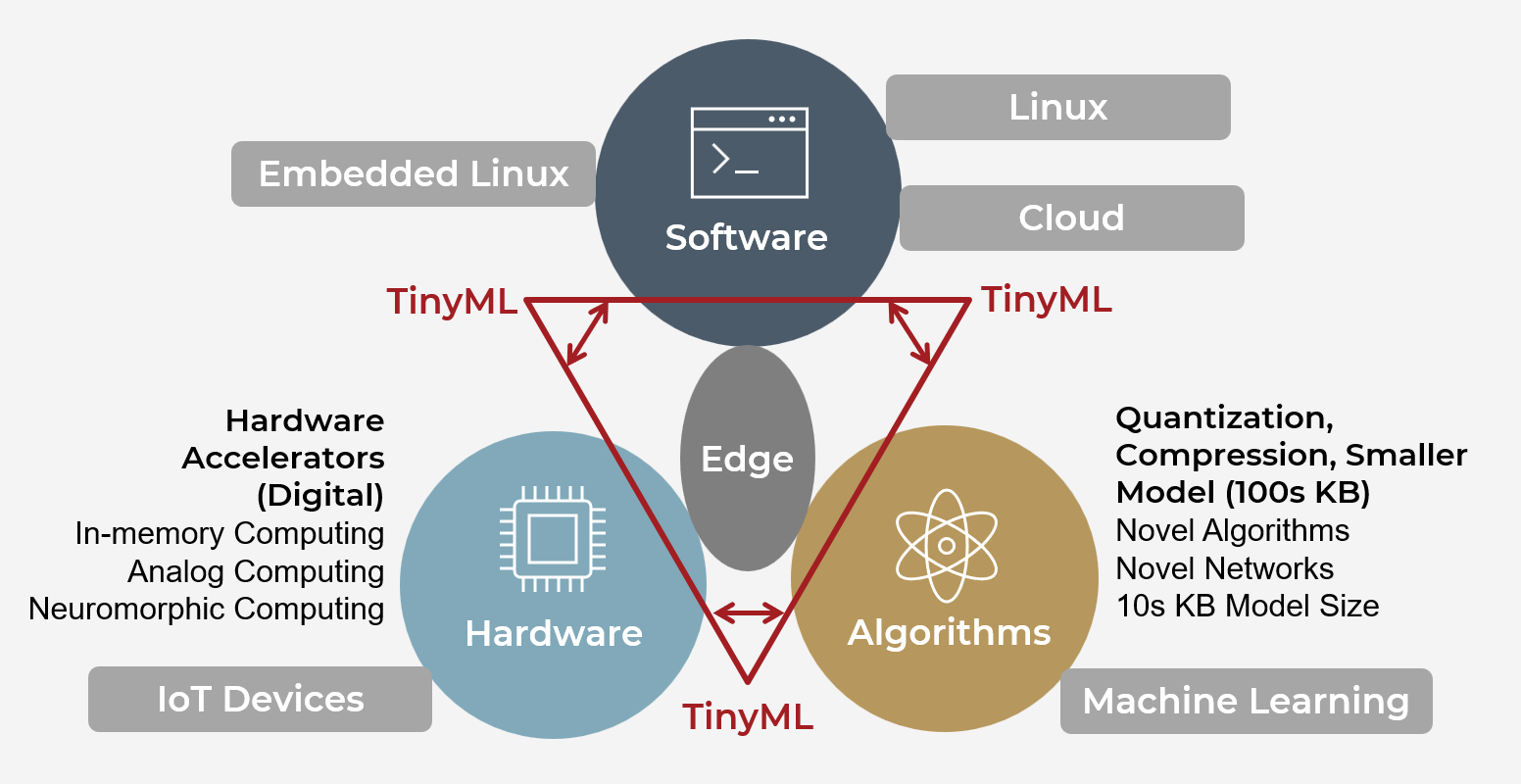

TinyML, the combination of machine learning-aware architectures, frameworks, techniques, tools and approaches capable of performing on-device analytics for a variety of sensing modalities, can be envisaged as the composition of three key elements:

- Software

- Hardware

- Algorithms

Structure of TinyML

As with any machine learning process, TinyML starts with analyzing data and designing and training models in desktop and/or cloud-based software. Depending on the hardware platform and requirements, the software that runs TinyML models on edge devices ranges from bare-metal implementations to real-time operating systems (RTOS) to embedded Linux. The hardware can comprise IoT devices with or without accelerators based on in-memory, analog or neuromorphic computing to improve learning performance. TinyML algorithms must be designed so that kilobyte-sized models can be deployed on edge devices with limited resources.

Hardware Platforms for TinyML Applications

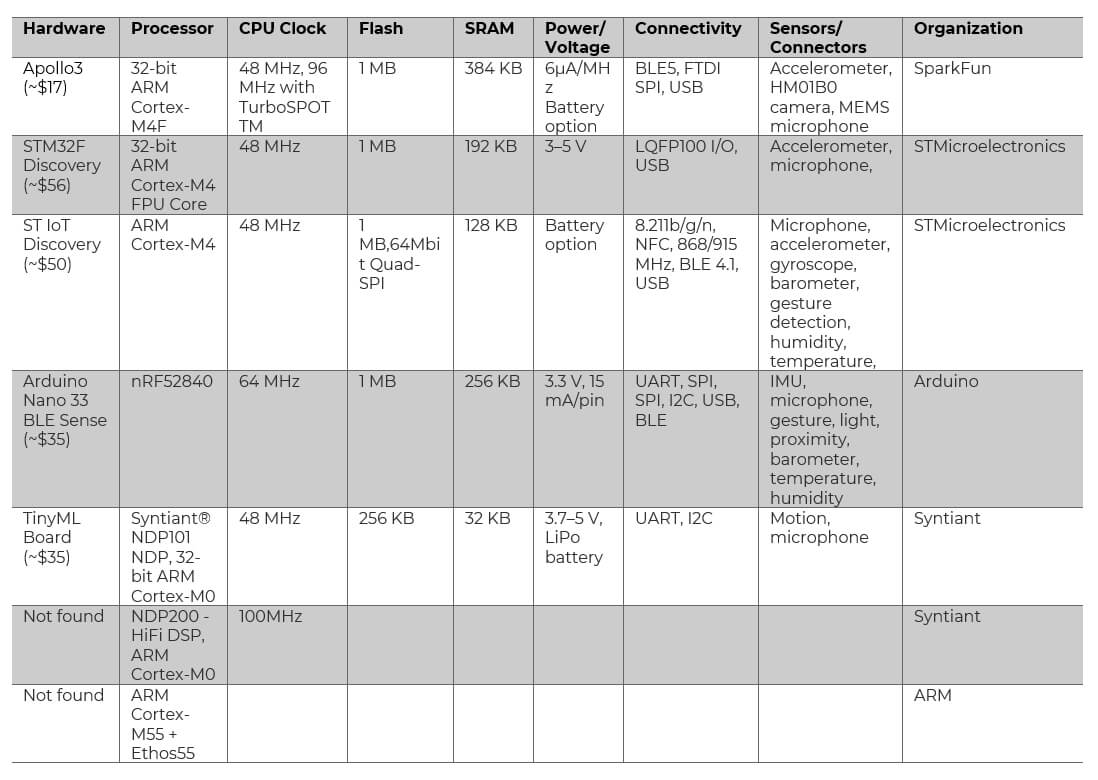

Your choice of hardware platform depends on the specific application requirements including computational requirements, power consumption constraints, cost and available development tools and libraries.

Traditional machine learning toolsets depend on sophisticated hardware such as GPUs. Today, myriad hardware platforms for AI and ML application development are available, and hardware built specifically for TinyML applications is growing in number, including dedicated designs such as application-specific integrated circuits (ASICs).

The table below compares a small subset of starter and learning hardware kits from different vendors, which you can use to gain hands-on experience with TinyML example projects. The kits are compared by processor, CPU clock frequency, flash memory, SRAM size, power consumption, connectivity, and sensors or connectors.

Select Hardware Platforms for TinyML Applications

Software Frameworks and Libraries Commonly Used for TinyML

Software frameworks and libraries provide the necessary tools and resources to train, deploy and optimize edge device machine learning models. Some popular software frameworks exist to simplify the deployment of TinyML models, whether offering pretrained models, data preprocessing tools, optimization techniques or some combination thereof to maximize performance on resource-constrained devices.

Let’s discuss three popular software frameworks and libraries commonly used for TinyML application development:

- TensorFlow Lite: An open-source deep learning framework, TensorFlow Lite is a lightweight version of the TensorFlow ecosystem designed for mobile and embedded devices. It supports Android, iOS, embedded Linux and a variety of microcontrollers, with APIs in several languages (C++, Python, Java, Swift, Objective-C), allowing developers to deploy models with a minimal footprint.

- PyTorch Mobile: Part of the popular PyTorch deep learning ecosystem, PyTorch Mobile aims to support the full workflow, from training machine learning models to deploying them on smartphones. Several APIs are available for preprocessing data for machine learning in mobile applications, and PyTorch Mobile currently supports image segmentation, object detection, video processing, speech recognition and question-answering tasks.

- Edge Impulse: A cloud service for developing machine learning models for TinyML-targeted edge devices, Edge Impulse is an end-to-end development platform designed specifically for TinyML applications. It supports AutoML for edge platforms and numerous boards (including smartphones), trains models in the cloud, and exports the trained model for deployment on an edge device; its data forwarder can stream sensor data from a device into the platform for training.

These software frameworks and libraries provide the tools, APIs and optimizations necessary to efficiently develop and deploy TinyML models on edge devices. Developers should consider the target hardware platform, development preferences, and compatibility with existing models or workflows to choose the best framework to develop their TinyML application.

Lightweight Algorithms for TinyML

Traditional machine learning models tend to be computationally heavy, requiring significant memory and CPU/GPU resources. Designed to run efficiently on microcontrollers with limited memory and processing capabilities, TinyML models typically rely on lightweight algorithms with reduced complexity and optimized models.

Common approaches to optimizing models for TinyML include the following:

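- Quantization: The process of constraining an input from a continuous or otherwise large set of values (e.g., real numbers) to a discrete set (e.g., integers). In TinyML, this typically means storing weights and activations as 8-bit integers instead of 32-bit floats, cutting their storage by roughly 4x.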

- Knowledge Distillation: A method of transferring knowledge from a larger deep neural network (the teacher) into a smaller network (the student).

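- Pruning: Removing parameters that contribute little to the output. Unstructured pruning deletes individual connections (weights) between neurons, while structured pruning deletes whole neurons (or channels and filters).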

- Low-Rank Factorization: This optimization method approximates a large weight matrix as the product of two smaller, lower-rank matrices, reducing both parameters and computation.

- Fast Convolution: Instead of computing the convolution directly, transform the input into the frequency domain (e.g., with a fast Fourier transform), multiply it element-wise by the filter kernel, which is transformed in advance, and transform the result back.
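Quantization can be made concrete with a minimal Python sketch of affine (asymmetric) int8 quantization, the scheme in which each real value x is stored as q = round(x / scale) + zero_point. The function names are hypothetical, and real toolchains (for example, TensorFlow Lite's converter) choose scales per tensor or per channel using calibration data, which this sketch does not do.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Affine int8 quantization: q = clamp(round(x / scale) + zero_point, -128, 127)."""
    lo = min(min(values), 0.0)  # range must include 0 so that 0.0 is exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # 256 int8 levels span [lo, hi]; avoid a zero scale
    zero_point = round(-128 - lo / scale)
    q = [max(-128, min(127, round(x / scale) + zero_point)) for x in values]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map int8 codes back to approximate real values: x ~= scale * (q - zero_point)."""
    return [scale * (v - zero_point) for v in q]


weights = [-0.62, -0.1, 0.0, 0.33, 0.91]
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)
print(q, round(scale, 5), zero_point)
print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight now occupies 1 byte instead of 4, and the reconstruction error stays within half a quantization step (scale / 2). Because zero maps exactly to `zero_point`, values such as zero padding survive quantization without error.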
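The two pruning variants differ in what they remove, as the following minimal Python sketch shows on a small weight matrix whose rows are output neurons. Magnitude (smallest absolute value) pruning is assumed as the selection criterion; it is a common choice, but other criteria exist, and the helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows = output neurons, columns = input connections


def prune_unstructured(w: Matrix, sparsity: float) -> Matrix:
    """Zero out exactly int(sparsity * size) individual weights with the smallest magnitude."""
    order = sorted((abs(x), r, c) for r, row in enumerate(w) for c, x in enumerate(row))
    k = int(sparsity * len(order))
    pruned = [row[:] for row in w]  # copy so the input matrix is left untouched
    for _, r, c in order[:k]:
        pruned[r][c] = 0.0
    return pruned


def prune_structured(w: Matrix, n_remove: int) -> Matrix:
    """Remove the `n_remove` whole neurons (rows) with the smallest L2 norm."""
    by_norm = sorted(range(len(w)), key=lambda r: math.sqrt(sum(x * x for x in w[r])))
    drop = set(by_norm[:n_remove])
    return [row[:] for r, row in enumerate(w) if r not in drop]


w = [[0.9, -0.05, 0.4],
     [0.01, 0.02, -0.03],
     [-0.7, 0.3, 0.08]]
print(prune_unstructured(w, sparsity=0.5))
print(prune_structured(w, n_remove=1))
```

Unstructured pruning keeps the matrix shape and saves memory or time only with sparse storage or sparse kernels, whereas structured pruning yields a genuinely smaller dense matrix that any runtime can execute; the next layer's matching input columns must then be removed as well.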
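For knowledge distillation, the core ingredient is the training loss. The Python sketch below follows the soft-target formulation of Hinton et al. (2015): the student matches the teacher's temperature-softened output distribution (KL divergence, scaled by T squared) while still learning from the true label (cross-entropy). The temperature and weighting values are illustrative defaults, the function names are hypothetical, and the training loop that would minimize this loss is omitted.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: Sequence[float], teacher_logits: Sequence[float],
                      label: int, temperature: float = 4.0, alpha: float = 0.5) -> float:
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * cross-entropy(student, label)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard_ce = -math.log(softmax(student_logits)[label])
    return alpha * temperature ** 2 * kl + (1 - alpha) * hard_ce


teacher = [4.0, 1.5, 0.2]
print(distillation_loss([3.5, 1.0, 0.5], teacher, label=0))  # student close to the teacher
print(distillation_loss([0.5, 1.0, 3.5], teacher, label=0))  # student disagrees: larger loss
```

The softened teacher distribution carries information about how similar the wrong classes are to the right one, which is what lets a small student learn more than it would from hard labels alone.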
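The savings from low-rank factorization follow from simple counting. For an m × n weight matrix W approximated with rank r (the truncated singular value decomposition gives the best such approximation in the least-squares, Frobenius-norm sense):

$$
W \approx U V, \qquad W \in \mathbb{R}^{m \times n},\; U \in \mathbb{R}^{m \times r},\; V \in \mathbb{R}^{r \times n},\; r \ll \min(m, n)
$$

$$
\text{parameters: } mn \;\longrightarrow\; r(m + n), \qquad \text{multiply-accumulates for } y = U(Vx)\text{: } mn \;\longrightarrow\; r(m + n)
$$

For example, with m = n = 256 and an assumed rank r = 16, the layer shrinks from 256 · 256 = 65,536 to 16 · (256 + 256) = 8,192 parameters, an 8× reduction. The rank is a tuning choice: too small a value discards information the model needs, so accuracy has to be re-checked (and is usually recovered by fine-tuning) after factorization.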
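Fast convolution rests on the convolution theorem: convolution in the time domain equals element-wise multiplication in the frequency domain. The pure-Python sketch below, with hypothetical helper names, implements a radix-2 FFT, zero-pads both signals so the circular result equals the linear convolution, and checks the answer against direct computation. On a microcontroller one would use an optimized library (such as Arm's CMSIS-DSP FFT routines) and store the kernel's transform precomputed, rather than recomputing it on every call as done here for brevity.

```python
import cmath
from typing import List, Sequence


def fft(a: Sequence[complex], invert: bool = False) -> List[complex]:
    """Recursive radix-2 Cooley-Tukey FFT; len(a) must be a power of two.
    With invert=True it computes the unnormalized inverse transform."""
    n = len(a)
    if n == 1:
        return [complex(a[0])]
    even = fft(a[0::2], invert)
    odd = fft(a[1::2], invert)
    sign = 1 if invert else -1
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def fft_convolve(x: Sequence[float], h: Sequence[float]) -> List[float]:
    """Linear convolution via the convolution theorem: conv(x, h) = IFFT(FFT(x) * FFT(h))."""
    out_len = len(x) + len(h) - 1
    n = 1
    while n < out_len:  # zero-pad to a power of two >= out_len so circular == linear
        n *= 2
    X = fft(list(x) + [0.0] * (n - len(x)))
    H = fft(list(h) + [0.0] * (n - len(h)))  # would be precomputed once per kernel in practice
    y = fft([a * b for a, b in zip(X, H)], invert=True)
    return [v.real / n for v in y[:out_len]]


def direct_convolve(x: Sequence[float], h: Sequence[float]) -> List[float]:
    """Reference O(N*K) convolution for comparison."""
    out = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            out[i + j] += xi * hj
    return out


signal = [1.0, 2.0, 3.0, 4.0]
kernel = [0.5, -1.0, 0.25]
print([round(v, 6) for v in fft_convolve(signal, kernel)])
print(direct_convolve(signal, kernel))
```

The FFT route costs O(N log N) instead of O(N·K) for a length-N input and a length-K kernel, so it pays off mainly for long kernels; for the short kernels typical of small CNNs, direct computation or other fast algorithms such as Winograd's are commonly used instead.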

When selecting algorithms for TinyML application development, consider factors such as model complexity, computational requirements, memory footprint and the availability of optimized implementations.

Reach Our TinyML Experts

Clearly, TinyML offers a transformative approach to building machine learning capabilities directly into connected edge devices. By enabling real-time decision-making, reduced latency, improved privacy and enhanced energy efficiency, TinyML opens up new possibilities for a wide range of applications.

While challenges exist, advancements in hardware, software and optimization techniques continue to drive the adoption and evolution of TinyML. As companies across industries demand the ability to execute ML models on edge devices to enable interactive new use cases, they can leverage TinyML’s potential to gain a competitive edge in their respective industries and unlock innovative solutions to complex problems.

Contact us today to discuss how we can help you integrate machine learning at the edge and unlock new opportunities for your organization. To learn more about our ML services, visit our page on data analytics.

Ready to explore the possibilities of TinyML? Check out these blog posts:

- How to Optimize Edge Machine Learning Applications Using STMicro’s STM32Cube.AI

- Building Edge ML AI Applications Using the OpenMV Cam

Learning Materials

Books

- TinyML: Machine Learning with TensorFlow Lite on Arduino and Ultra-Low-Power Microcontrollers

- TinyML Cookbook: Combine artificial intelligence and ultra-low-power embedded devices to make the world smarter

Articles and Videos

- A review on TinyML: State-of-the-art and prospects

- Arm Tech Talk from Neuton.ai: The Next Generation Smart Toothbrush